Many context-aware services have been proposed in recent years in an attempt to make life simpler.

Although the term "context-aware" was coined in 1994, the first context-aware solution in the literature was offered in 1991 [69].

Want et al. developed Active Badge, a novel technology for locating individuals in an office setting.

This technology was able to determine the position of users in order to route calls to phones in close proximity to the user.

Because users wore badges that sent signals to a centralized location system, the location of the users was known.
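The routing idea can be illustrated with a minimal sketch. It is not the Active Badge implementation: the `Sighting` record, the room names, and the phone extensions are all hypothetical, and the sketch simply assumes that a central service stores timestamped badge sightings and forwards a call to the phone in the room where the badge was last seen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    badge_id: str     # badge worn by a user (hypothetical identifier)
    room: str         # room of the sensor that received the badge signal
    timestamp: float  # time of the sighting, in seconds


def latest_room(sightings, badge_id):
    """Return the room of the most recent sighting of a badge, or None."""
    seen = [s for s in sightings if s.badge_id == badge_id]
    if not seen:
        return None
    return max(seen, key=lambda s: s.timestamp).room


def route_call(sightings, phones_by_room, badge_id):
    """Pick the phone extension in the room where the user was last seen."""
    room = latest_room(sightings, badge_id)
    return phones_by_room.get(room)  # None if the room is unknown or has no phone


sightings = [
    Sighting("alice", "lab", 10.0),
    Sighting("alice", "office-2", 25.0),
    Sighting("bob", "lab", 20.0),
]
phones_by_room = {"lab": "x101", "office-2": "x202"}
print(route_call(sightings, phones_by_room, "alice"))  # x202
```

Keeping the sightings in one central store mirrors the centralized location system described above; a decentralized design would need a different lookup.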

Following that, many other solutions have been proposed in many fields, such as the one presented by Wood et al. in [70].

They suggested a teleporting system that could make the user's environment accessible from any machine having a Java-enabled web browser.

With this approach, users did not need to carry any computing device with them, and they could run their programs on any nearby system.

These context-aware systems gave users additional options for obtaining personalized services by collecting context information, which was particularly useful when users were highly mobile.

Car navigation systems, emergency services, and recommender systems are well-known examples of context-aware solutions aimed at mobile users.

A recent in-depth survey of context-aware systems examines a significant number of solutions across a variety of aspects [71].

Feel@Home [72], Hydra [73], CroCo [74], SOCAM [75], and CoBrA [76] are examples of systems that support "security and privacy" features.

Feel@Home was a context-aware framework that allowed for communication across contexts or domains while also taking into account intra- and inter-domain interactions.

Hydra was an IoT-focused ambient intelligence middleware that combined device, semantic, and application contexts to provide context-aware information.

CroCo, on the other hand, was a cross-application context management service for heterogeneous environments, while SOCAM modeled the quality, dependencies, and classification of context information using a set of ontologies.

This set was built on a common upper ontology that defined concepts shared across all contexts, as well as domain-specific ontologies that defined concepts within each one.

CoCA [77] is another similar effort in this area that is not included in the survey reported in [71].

This work proposed a collaborative context-aware service platform with a neighborhood-based resource sharing mechanism.

By evaluating information about the context and the position of the pieces, CoCA was able to deduce the users' location.

SHERLOCK [78] was a framework for location-based services that employed semantic technologies to help users select the service that best met their requirements in the current situation.

Hydra [73], introduced above, was another IoT-focused solution.

It was a middleware responsible for providing solutions to wireless devices and sensors in the context of ambient awareness.

It considered a strong reasoning technique for a variety of context sources, such as physical device-based, semantic, and abstract layer-based context sources.

PerDe [79] was a development environment for pervasive computing applications that were tailored to the demands of the user.

It included a domain-specific design language as well as a collection of graphical tools to aid in the creation of ubiquitous applications at various phases.

DiaSuite [80] was another tool suite for developing applications in the Sense/Compute/Control (SCC) domain, driven by a software design approach.

DiaSuite also included a compiler that generated a specific Java programming framework that guided programmers through the implementation of the different components of the software system.

Semantic rules were used in several of the solutions listed above for various purposes.

Hydra, CroCo, SHERLOCK, and SOCAM all employed semantic rules to infer new information about a given context while taking information from other sources into consideration.

CoCA, in contrast, used semantic rules to govern the ontologies themselves, for example by declaring one property to be the inverse of another, as well as to encode domain-specific information.
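As a rough illustration of how a rule of this kind derives new facts, the sketch below applies an inverse-property rule to a small set of subject-predicate-object triples. It is not taken from any of the surveyed systems; the property names (`locatedIn`, `contains`, `partOf`) and the individuals are hypothetical.

```python
def apply_inverse_rules(triples, inverse_of):
    """Add (o, q, s) for every (s, p, o) where q is declared the inverse of p."""
    # Inverse declarations work in both directions: if q is the inverse
    # of p, then p is also the inverse of q.
    symmetric = dict(inverse_of)
    symmetric.update({q: p for p, q in inverse_of.items()})

    inferred = set(triples)
    for s, p, o in triples:
        if p in symmetric:
            inferred.add((o, symmetric[p], s))
    return inferred


facts = {
    ("alice", "locatedIn", "room12"),
    ("room12", "partOf", "building3"),
}
inverses = {"locatedIn": "contains"}

result = apply_inverse_rules(facts, inverses)
print(("room12", "contains", "alice") in result)  # True
```

A single pass is enough here because applying the inverse rule to an inferred triple only reproduces the original one.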

Despite this, none of these systems employed semantic rules to build policies aimed at safeguarding users' privacy preferences.

Any context-aware framework should support users' privacy by allowing them to dynamically restrict or reveal information to others based on their location and privacy preferences.

As a result, the current trend in context-aware systems is to utilize rules to govern the disclosure of users' location.

There are many semantic web-based systems that administer rules to protect users' privacy.

CoBrA, for example, demonstrated a context-aware design that enabled remote agents to communicate information.

CoBrA defined an ontology for modeling places populated by smart agents, devices, and sensors, while also protecting its users' privacy via rules that determine whether they have the appropriate rights to share and/or receive data.

Another example is the Preserving Privacy in Context-aware Systems (PPCS) solution [81], which proposed a semantically rich, policy-based framework with several degrees of privacy to secure users' information in settings with mobile devices.

To make access control decisions, dynamic information observed or inferred from the context was combined with static information about the owner.

Users' location and context information was then shared (or withheld) in accordance with their privacy rules.

CoPS [82] is another proposal that supports privacy policies without requiring semantic web technologies.

In CoPS, users could decide who had access to their context data, when it could be accessed, and at what level of granularity.
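A policy of this shape (who, when, and at what granularity) can be sketched as follows. This is an illustrative assumption rather than the CoPS design: the `Rule` fields, the three granularity levels, and the deny-by-default behavior are all hypothetical choices.

```python
from dataclasses import dataclass
from datetime import time

# Hypothetical granularity levels, ordered from coarsest to finest.
LEVELS = ("city", "building", "room")


@dataclass(frozen=True)
class Rule:
    requester: str    # who may ask
    start: time       # beginning of the allowed time window
    end: time         # end of the allowed time window
    granularity: str  # finest level that may be disclosed


def disclose(location, rules, requester, now):
    """Return the location truncated to the allowed granularity, or None.

    Requests that match no rule are denied (deny by default).
    """
    for rule in rules:
        if rule.requester == requester and rule.start <= now <= rule.end:
            keep = LEVELS.index(rule.granularity) + 1
            return {level: location[level] for level in LEVELS[:keep]}
    return None


location = {"city": "Springfield", "building": "Library", "room": "2.14"}
rules = [Rule("colleague", time(9), time(17), "building")]

print(disclose(location, rules, "colleague", time(10, 30)))
# {'city': 'Springfield', 'building': 'Library'}
print(disclose(location, rules, "colleague", time(20, 0)))  # None
```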

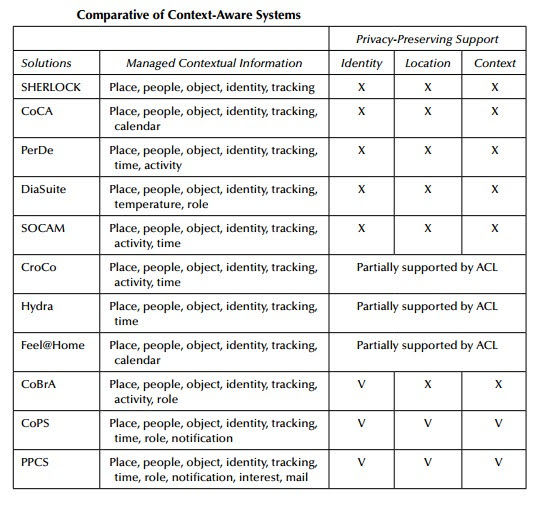

A comparison of the preceding solutions is shown in the Table below, taking into consideration the contextual information they handle and their privacy support.

In addition to the preceding solutions, SeCoMan [83] is intended to safeguard users' privacy in context-aware scenarios. It obtains information from the context or environment in which users are located, models and protects personal information, and supports context-aware applications.

SeCoMan offers a semantic web-oriented architecture.

On the one hand, information about users and situations is represented using an OWL 2-defined set of ontologies.

Users' information, on the other hand, is safeguarded by policies described in the Semantic Web Rule Language (SWRL).

These rules enable users to share their location with the individuals they choose, at the granularity they want, and at the proper time and place.

The SeCoMan paper also provides a comparison and analysis of several context-aware systems.

The Prophet architecture [84], on the other hand, offers a security approach that enables users to share their location data.

To describe users' activity patterns, the authors of this proposal define a fingerprint identification based on Markov chains and state classification.
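To give a feel for how a Markov chain can summarize an activity pattern, the sketch below estimates first-order transition probabilities from a sequence of visited places. It is only an assumption-laden illustration, not the fingerprint construction used in Prophet; the place labels and the `transition_probabilities` helper are hypothetical.

```python
from collections import Counter, defaultdict


def transition_probabilities(trace):
    """Estimate first-order Markov transition probabilities from a trace.

    `trace` is an ordered list of states (here, visited places). The result
    maps each state to a dict of next-state probabilities.
    """
    counts = defaultdict(Counter)
    for current, nxt in zip(trace, trace[1:]):
        counts[current][nxt] += 1
    return {
        state: {nxt: n / sum(following.values()) for nxt, n in following.items()}
        for state, following in counts.items()
    }


trace = ["home", "work", "gym", "home", "work", "home"]
model = transition_probabilities(trace)
print(model["work"])  # {'gym': 0.5, 'home': 0.5}
```

Two users with different routines would yield different transition tables, which is what makes such a model usable as a behavioral fingerprint.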

Furthermore, they suggest a location-based anonymization system that uses an indistinguishability approach to secure users' sensitive information.

According to the authors, several experiments show that the proposed method performs well and is effective.

PRECISE [85] is another privacy-preserving and context-aware system that makes suggestions based on the information that users choose to divulge to certain services.

With this solution, users can release their locations to specific services, hide their positions from specific users, mask their locations from other users by generating fictitious (fake) positions, set the granularity and proximity at which they want to be located by services or users, and remain anonymous to specific services.
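Two of these options, coarser granularity and fake positions, can be sketched in a few lines. The functions below are hypothetical and are not part of PRECISE: the sketch assumes that granularity is reduced by rounding coordinates and that fake positions are drawn uniformly within a fixed offset of the real one.

```python
import random


def coarsen(lat, lon, decimals):
    """Reduce precision: fewer decimals means a coarser reported position."""
    return (round(lat, decimals), round(lon, decimals))


def fake_positions(lat, lon, count, max_offset, seed=None):
    """Generate `count` dummy positions within +/- max_offset degrees."""
    rng = random.Random(seed)  # a seed makes the output reproducible
    return [
        (lat + rng.uniform(-max_offset, max_offset),
         lon + rng.uniform(-max_offset, max_offset))
        for _ in range(count)
    ]


real = (37.98765, -1.13049)  # arbitrary example coordinates
print(coarsen(*real, decimals=2))  # (37.99, -1.13)
decoys = fake_positions(*real, count=3, max_offset=0.01, seed=42)
print(len(decoys))  # 3
```

Uniform random decoys are easy to generate but can be implausible (for example, in the middle of a lake), so a real system would likely need a more careful generator.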

To this end, an architecture based on the Mobile Cloud Computing (MCC) paradigm was created.

This architecture is made up of services that are allocated at the MCC paradigm's Software as a Service (SaaS) layer and provide users with context-aware recommendations.

The solution's major component is middleware deployed at the Platform as a Service (PaaS) layer, which maintains users' information and handles context and space information that might be given by independent systems.

ProtectMyPrivacy (PmP) [86] is a privacy protection solution that was designed and deployed for Android.

When privacy-sensitive data accesses occur, PmP can identify key contextual information at runtime.

Based on crowd-sourced data, PmP infers the purpose of data access.

The authors show that controlling sensitive data accessed by third-party libraries might be a useful tool for protecting users' privacy.

The following set of solutions focuses on safeguarding users' data while they move between different settings or situations (intra- and inter-context scenarios).

Along these lines, CAPRIS [87] is a system that protects users' information regardless of their location.

Using CAPRIS, users could choose in real time what, where, when, how, to whom, and with what degree of accuracy they wanted to share their information.

This information may include the space in which they are located at various degrees of granularity, the users' personal information at various levels of accuracy, the users' activities, and information specific to the context in which they are located.

Users did not have to manage their privacy manually while using CAPRIS; instead, they only had to choose the best-suited set of policies offered by the system.

MASTERY [88], an extension of CAPRIS with the same aims, offers users different sets of privacy-preserving and context-aware policies, termed profiles.

Users just need to pick the most appropriate profile based on their interests in their current context, and they may edit these profiles by adding, removing, or changing some of the policies that shape them.

When information is about to be exchanged, the owner is notified in real time and chooses whether to allow or prohibit the exchange.

Finally, h-MAS [89] is a privacy-preserving and context-aware solution for health situations that aims to manage user privacy in both intra- and inter-context scenarios.

In a health scenario, h-MAS recommends to users a set of privacy policies that take their current health context into account.

Users may make changes to the policies based on their preferences.

These rules safeguard users' health records, whereabouts, and context-aware data from being accessed by other parties without their permission.

Semantic web approaches, which offer a common architecture that makes it easier to describe, analyze, and communicate information across separate systems, are used to manage patient information and the health context.

In terms of network administration, controlling network resources at run-time and taking contextual information into account may result in significant benefits in terms of automated management, energy savings, and security.

In this regard, [90] proposes a mobility-aware, policy-based system for lowering energy usage in networks based on the SDN paradigm.

The policies proposed in this solution enable the SDN paradigm to turn on/off network resources that are using energy inefficiently, as well as to build virtualized network resources such as proxies to decrease the network traffic created by users consuming services close to the network infrastructure.

Based on energy consumption, user mobility, and network statistics, network administrators define policies that determine the list of possible actions to be taken by SDN components.

This solution also includes an architecture for managing mobile network resources based on these policies and an ontology that represents the concepts associated with the mobile network domain.

The ontology provides a set of primitives for describing a collection of the resources controlled by the SDN paradigm, as well as their relationships.

Finally, in [91], a solution was proposed to maintain QoS and end-user experience in dynamic mobile network settings by taking contextual information into account.

This approach presents an architecture for managing SDN resources at run-time using high-level policies.

The authors highlight the use of mobility-aware, management-oriented policies, which are defined by the service provider's network administrator to determine the actions taken by the SDN based on network infrastructure data and location, as well as user and service mobility.

These policies are designed to provide end-users with a positive experience in very congested areas (e.g., stadiums, shopping malls, or unexpected traffic jams).

To that end, the policies determine when the SDN should balance network traffic between infrastructure near the congested one, when the SDN should create or dismantle physical or virtual infrastructure if the congested one is insufficient to meet end-user demand, and when the SDN should restrict or limit specific services or network traffic in critical situations caused by large crowds using services in specific areas.
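The three kinds of decision described above can be pictured as a small rule cascade. The sketch below is a simplified assumption, not the architecture from [91]: the `AreaStatus` fields, the single utilization threshold, and the action names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AreaStatus:
    load: float            # utilization of the congested infrastructure, 0.0 to 1.0
    neighbour_load: float  # average utilization of nearby infrastructure
    can_scale: bool        # whether physical or virtual infrastructure can be added


def decide_action(status, threshold=0.8):
    """Choose a management action for one area using ordered policy rules."""
    if status.load < threshold:
        return "no_action"              # not congested
    if status.neighbour_load < threshold:
        return "balance_traffic"        # nearby infrastructure has spare capacity
    if status.can_scale:
        return "create_infrastructure"  # neighbours are full, so add capacity
    return "restrict_services"          # last resort in critical situations


print(decide_action(AreaStatus(load=0.9, neighbour_load=0.4, can_scale=True)))
# balance_traffic
print(decide_action(AreaStatus(load=0.95, neighbour_load=0.9, can_scale=False)))
# restrict_services
```

Ordering the rules from least to most disruptive means services are only restricted when balancing and scaling are both unavailable.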

~ Jai Krishna Ponnappan


References & Further Reading:

1. OSI. Information Processing Systems-Open System Interconnection-Systems Management Overview. ISO 10040, 1991.

2. Jefatura del Estado. Ley Orgánica de Protección de Datos de Carácter Personal. www.boe.es/boe/dias/1999/12/14/pdfs/A43088-43099.pdf.

3. S. D. Warren and L. D. Brandeis. The right to privacy. Harvard Law Review, 4(5): 193–220, 1890.

4. A. Westerinen, J. Schnizlein, J. Strassner, M. Scherling, B. Quinn, S. Herzog, A. Huynh, M. Carlson, J. Perry, and S. Waldbusser. Terminology for Policy-Based Management. IETF Request for Comments 3198, November 2001.

5. B. Moore. Policy Core Information Model (PCIM) Extensions. IETF Request for Comments 3460, January 2003.

6. S. Godik, and T. Moses. OASIS EXtensible Access Control Markup Language (XACML). OASIS Committee Specification, 2002.

7. A. Dardenne, A. Van Lamsweerde and S. Fickas. Goal-directed requirements acquisition. Science of Computer Programming, 20(1–2): 3–50, 1993.

8. F. L. Gandon, and N. M. Sadeh. Semantic web technologies to reconcile privacy and context awareness. Web Semantics: Science, Services and Agents on the World Wide Web, 1(3): 241–260, April 2004.

9. I. Horrocks. Ontologies and the semantic web. Communications ACM, 51(12): 58–67, December 2008.

10. R. Boutaba and I. Aib. Policy-based management: A historical perspective. Journal of Network and Systems Management, 15(4): 447–480, 2007.

11. P. A. Carter. Policy-Based Management, In Pro SQL Server Administration, pages 859–886. Apress, Berkeley, CA, 2015.

12. D. Florencio, and C. Herley. Where do security policies come from? In Proceedings of the 6th Symposium on Usable Privacy and Security, pages 10:1–10:14, 2010.

13. K. Yang, and X. Jia. DAC-MACS: Effective data access control for multi-authority Cloud storage systems, IEEE Transactions on Information Forensics and Security, 8(11): 1790–1801, 2014.

14. B. W. Lampson. Dynamic protection structures. In Proceedings of the Fall Joint Computer Conference, pages 27–38, 1969.

15. B. W. Lampson. Protection. ACM SIGOPS Operating Systems Review, 8(1): 18–24, January 1974.

16. D. E. Bell and L. J. LaPadula. Secure Computer Systems: Mathematical Foundations. Technical report, DTIC Document, 1973.

17. D. F. Ferraiolo, and D. R. Kuhn. Role-based access controls. In Proceedings of the 15th NIST-NCSC National Computer Security Conference, pages 554–563, 1992.

18. V. P. Astakhov. Surface integrity: Definition and importance in functional performance, In Surface Integrity in Machining, pages 1–35. Springer, London, 2010.

19. K. J. Biba. Integrity Considerations for Secure Computer Systems. Technical report, DTIC Document, 1977.

20. M. J. Culnan, and P. K. Armstrong. Information privacy concerns, procedural fairness, and impersonal trust: An empirical investigation. Organization Science, 10(1): 104–115, 1999.

21. A. I. Antón, E. Bertino, N. Li, and T. Yu. A roadmap for comprehensive online privacy policy management. Communications ACM, 50(7): 109–116, July 2007.

22. J. Karat, C. M. Karat, C. Brodie, and J. Feng. Privacy in information technology: Designing to enable privacy policy management in organizations. International Journal of Human Computer Studies, 63(1–2): 153–174, 2005.

23. M. Jafari, R. Safavi-Naini, P. W. L. Fong, and K. Barker. A framework for expressing and enforcing purpose-based privacy policies. ACM Transactions on Information and System Security, 17(1): 3:1–3:31, August 2014.

24. G. Karjoth, M. Schunter, and M. Waidner. Platform for enterprise privacy practices: Privacy-enabled management of customer data, In Proceedings of the International Workshop on Privacy Enhancing Technologies, pages 69–84, 2003.

25. S. R. Blenner, M. Kollmer, A. J. Rouse, N. Daneshvar, C. Williams, and L. B. Andrews. Privacy policies of android diabetes apps and sharing of health information. JAMA, 315(10): 1051–1052, 2016.

26. R. Ramanath, F. Liu, N. Sadeh, and N. A. Smith. Unsupervised alignment of privacy policies using hidden Markov models. In Proceedings of the Annual Meeting of the Association of Computational Linguistics, pages 605–610, June 2014.

27. J. Gerlach, T. Widjaja, and P. Buxmann. Handle with care: How online social network providers’ privacy policies impact users’ information sharing behavior. The Journal of Strategic Information Systems, 24(1): 33–43, 2015.

28. O. Badve, B. B. Gupta, and S. Gupta. Reviewing the Security Features in Contemporary Security Policies and Models for Multiple Platforms. In Handbook of Research on Modern Cryptographic Solutions for Computer and Cyber Security, pages 479–504. IGI Global, Hershey, PA, 2016.

29. K. Zkik, G. Orhanou, and S. El Hajji. Secure mobile multi cloud architecture for authentication and data storage. International Journal of Cloud Applications and Computing 7(2): 62–76, 2017.

30. C. Stergiou, K. E. Psannis, B. Kim, and B. Gupta. Secure integration of IoT and cloud computing. In Future Generation Computer Systems, 78(3): 964–975, 2018.

31. D. C. Verma. Simplifying network administration using policy-based management. IEEE Network, 16(2): 20–26, March 2002.

32. D. C. Verma. Policy-Based Networking: Architecture and Algorithms. New Riders Publishing, Thousand Oaks, CA, 2000.

33. J. Rubio-Loyola, J. Serrat, M. Charalambides, P. Flegkas, and G. Pavlou. A methodological approach toward the refinement problem in policy-based management systems. IEEE Communications Magazine, 44(10): 60–68, October 2006.

34. F. Perich. Policy-based network management for next generation spectrum access control. In Proceedings of International Symposium on New Frontiers in Dynamic Spectrum Access Networks, pages 496–506, April 2007.

35. S. Shin, P. A. Porras, V. Yegneswaran, M. W. Fong, G. Gu, and M. Tyson. FRESCO: Modular composable security services for Software-Defined Networks. In Proceedings of the 20th Annual Network and Distributed System Security Symposium, pages 1–16, 2013.

36. K. Odagiri, S. Shimizu, N. Ishii, and M. Takizawa. Functional experiment of virtual policy based network management scheme in Cloud environment. In International Conference on Network-Based Information Systems, pages 208–214, September 2014.

37. M. Casado, M. J. Freedman, J. Pettit, J. Luo, N. McKeown, and S. Shenker. Ethane: Taking control of the enterprise. In Proceedings of Conference on Applications, Technologies, Architectures, and Protocols for Computer Communications, pages 1–12, August 2007.

38. M. Wichtlhuber, R. Reinecke, and D. Hausheer. An SDN-based CDN/ISP collaboration architecture for managing high-volume flows. IEEE Transactions on Network and Service Management, 12(1): 48–60, March 2015.

39. A. Lara, and B. Ramamurthy. OpenSec: Policy-based security using Software-Defined Networking. IEEE Transactions on Network and Service Management, 13(1): 30–42, March 2016.

40. W. Jingjin, Z. Yujing, M. Zukerman, and E. K. N. Yung. Energy-efficient base stations sleep-mode techniques in green cellular networks: A survey. IEEE Communications Surveys Tutorials, 17(2): 803–826, 2015.

41. G. Auer, V. Giannini, C. Desset, I. Godor, P. Skillermark, M. Olsson, M. A. Imran, D. Sabella, M. J. Gonzalez, O. Blume, and A. Fehske. How much energy is needed to run a wireless network? IEEE Wireless Communications, 18(5): 40–49, 2011.

42. W. Yun, J. Staudinger, and M. Miller. High efficiency linear GaAs MMIC amplifier for wireless base station and Femto cell applications. In IEEE Topical Conference on Power Amplifiers for Wireless and Radio Applications, pages 49–52, January 2012.

43. M. A. Marsan, L. Chiaraviglio, D. Ciullo, and M. Meo. Optimal energy savings in cellular access networks. In IEEE International Conference on Communications Workshops, pages 1–5, June 2009.

44. H. Claussen, I. Ashraf, and L. T. W. Ho. Dynamic idle mode procedures for femtocells. Bell Labs Technical Journal, 15(2): 95–116, 2010.

45. L. Rongpeng, Z. Zhifeng, C. Xianfu, J. Palicot, and Z. Honggang. TACT: A transfer actor-critic learning framework for energy saving in cellular radio access networks. IEEE Transactions on Wireless Communications, 13(4): 2000–2011, 2014.

46. G. C. Januario, C. H. A. Costa, M. C. Amarai, A. C. Riekstin, T. C. M. B. Carvalho, and C. Meirosu. Evaluation of a policy-based network management system for energy-efficiency. In IFIP/IEEE International Symposium on Integrated Network Management, pages 596–602, May 2013.

47. C. Dsouza, G. J. Ahn, and M. Taguinod. Policy-driven security management for fog computing: Preliminary framework and a case study. In Conference on Information Reuse and Integration, pages 16–23, August 2014.

48. H. Kim and N. Feamster. Improving network management with Software Defined Networking. IEEE Communications Magazine, 51(2): 114–119, February 2013.

49. O. Gaddour, A. Koubaa, and M. Abid. Quality-of-service aware routing for static and mobile IPv6-based low-power and loss sensor networks using RPL. Ad Hoc Networks, 33: 233–256, 2015.

50. Q. Zhao, D. Grace, and T. Clarke. Transfer learning and cooperation management: Balancing the quality of service and information exchange overhead in cognitive radio networks. Transactions on Emerging Telecommunications Technologies, 26(2): 290–301, 2015.

51. M. Charalambides, P. Flegkas, G. Pavlou, A. K. Bandara, E. C. Lupu, A. Russo, N. Dulav, M. Sloman, and J. Rubio-Loyola. Policy conflict analysis for quality of service management. In Proceedings of the 6th IEEE International Workshop on Policies for Distributed Systems and Networks, pages 99–108, June 2005.

52. M. F. Bari, S. R. Chowdhury, R. Ahmed, and R. Boutaba. PolicyCop: An autonomic QoS policy enforcement framework for software defined networks. In 2013 IEEE SDN for Future Networks and Services, pages 1–7, November 2013.

53. C. Bennewith and R. Wickers. The mobile paradigm for content development, In Multimedia and E-Content Trends, pages 101–109. Vieweg+Teubner Verlag, 2009.

54. I. A. Junglas, and R. T. Watson. Location-based services. Communications ACM, 51(3): 65–69, March 2008.

55. M. Weiser. The computer for the 21st century. Scientific American, 265(3): 94–104, 1991.

56. G. D. Abowd, A. K. Dey, P. J. Brown, N. Davies, M. Smith, and P. Steggles. Towards a better understanding of context and context-awareness. In Handheld and Ubiquitous Computing, pages 304–307, September 1999.

57. B. Schilit, N. Adams, and R. Want. Context-aware computing applications. In Proceeding of the 1st Workshop Mobile Computing Systems and Applications, pages 85–90, December 1994.

58. N. Ryan, J. Pascoe, and D. Morse. Enhanced reality fieldwork: The context aware archaeological assistant. In Proceedings of the 25th Anniversary Computer Applications in Archaeology, pages 85–90, December 1997.

59. A. K. Dey. Context-aware computing: The CyberDesk project. In Proceedings of the AAAI 1998 Spring Symposium on Intelligent Environments, pages 51–54, 1998.

60. P. Prekop and M. Burnett. Activities, context and ubiquitous computing. Computer Communications, 26(11): 1168–1176, July 2003.

61. R. M. Gustavsen. Condor-an application framework for mobility-based context-aware applications. In Proceedings of the Workshop on Concepts and Models for Ubiquitous Computing, volume 39, September 2002.

62. C. Tadj and G. Ngantchaha. Context handling in a pervasive computing system framework. In Proceedings of the 3rd International Conference on Mobile Technology, Applications and Systems, pages 1–6, October 2006.

63. S. Dhar and U. Varshney. Challenges and business models for mobile location-based services and advertising. Communications ACM, 54(5): 121–128, May 2011.

64. F. Ricci, L. Rokach, and B. Shapira. Recommender Systems: Introduction and Challenges, In Recommender Systems Handbook, pages 1–34. Springer, Boston, MA, 2015.

65. J. B. Schafer, D. Frankowski, J. Herlocker, and S. Sen. Collaborative Filtering Recommender Systems, In The Adaptive Web, pages 291–324. Springer, Berlin, Heidelberg, 2007.

66. P. Lops, M. de Gemmis, and G. Semeraro. Content-Based Recommender Systems: State of the Art and Trends, In Recommender Systems Handbook, pages 73–105. Springer, Boston, MA, 2011.

67. D. Slamanig and C. Stingl. Privacy aspects of eHealth. In Proceedings of Conference on Availability, Reliability and Security, pages 1226–1233, March 2008.

68. C. Wang. Policy-based network management. In Proceedings of the International Conference on Communication Technology, volume 1, pages 101–105, 2000.

69. R. Want, A. Hopper, V. Falcao, and J. Gibbons. The active badge location system. ACM Transactions on Information Systems, 10(1): 91–102, January 1992.

70. K. R. Wood, T. Richardson, F. Bennett, A. Harter, and A. Hopper. Global teleporting with Java: Toward ubiquitous personalized computing. Computer, 30(2): 53–59, February 1997.

71. C. Perera, A. Zaslavsky, P. Christen, and D. Georgakopoulos. Context aware computing for the Internet of Things: A survey. IEEE Communications Surveys Tutorials, 16(1): 414–454, 2014.

72. B. Guo, L. Sun, and D. Zhang. The architecture design of a cross-domain context management system. In Proceedings of Conference Pervasive Computing and Communications Workshops, pages 499–504, April 2010.

73. A. Badii, M. Crouch, and C. Lallah. A context-awareness framework for intelligent networked embedded systems. In Proceedings of Conference on Advances in Human-Oriented and Personalized Mechanisms, Technologies and Services, pages 105–110, August 2010.

74. S. Pietschmann, A. Mitschick, R. Winkler, and K. Meissner. CroCo: Ontology-based, cross-application context management. In Proceedings of Workshop on Semantic Media Adaptation and Personalization, pages 88–93, December 2008.

75. T. Gu, X. H. Wang, H. K. Pung, and D. Q. Zhang. An ontology-based context model in intelligent environments. In Proceedings of Communication Networks and Distributed Systems Modeling and Simulation Conference, pages 270–275, January 2004.

76. H. Chen, T. Finin, and A. Joshi. An ontology for context-aware pervasive computing environments. The Knowledge Engineering Review, 18(03): 197–207, September 2003.

77. D. Ejigu, M. Scuturici, and L. Brunie. CoCA: A collaborative context-aware service platform for pervasive computing. In Proceedings of Conference Information Technologies, pages 297–302, April 2007.

78. R. Yus, E. Mena, S. Ilarri, and A. Illarramendi. SHERLOCK: Semantic management of location based services in wireless environments. Pervasive and Mobile Computing, 15: 87–99, 2014.

79. L. Tang, Z. Yu, H. Wang, X. Zhou, and Z. Duan. Methodology and tools for pervasive application development. International Journal of Distributed Sensor Networks, 10(4): 1–16, 2014.

80. B. Bertran, J. Bruneau, D. Cassou, N. Loriant, E. Balland, and C. Consel. DiaSuite: A tool suite to develop sense/compute/control applications. Science of Computer Programming, 79: 39–51, 2014.

81. P. Jagtap, A. Joshi, T. Finin, and L. Zavala. Preserving privacy in context-aware systems. In Proceedings of Conference on Semantic Computing, pages 149–153, September 2011.

82. V. Sacramento, M. Endler, and F. N. Nascimento. A privacy service for context-aware mobile computing. In Proceedings of Conference on Security and Privacy for Emergency Areas in Communication Networks, pages 182–193, September 2005.

83. A. Huertas Celdrán, F. J. García Clemente, M. Gil Pérez, and G. Martínez Pérez. SeCoMan: A semantic-aware policy framework for developing privacy-preserving and context-aware smart applications. IEEE Systems Journal, 10(3): 1111–1124, September 2016.

84. J. Qu, G. Zhang, and Z. Fang. Prophet: A context-aware location privacy-preserving scheme in location sharing service. Discrete Dynamics in Nature and Society, 2017, 1–11, Article ID 6814832, 2017.

85. A. Huertas Celdrán, M. Gil Pérez, F. J. García Clemente, and G. Martínez Pérez. PRECISE: Privacy-aware recommender based on context information for Cloud service environments. IEEE Communications Magazine, 52(8): 90–96, August 2014.

86. S. Chitkara, N. Gothoskar, S. Harish, J.I. Hong, and Y. Agarwal. Does this app really need my location? Context-aware privacy management for smartphones. In Proceedings of the ACM Interactive Mobile, Wearable and Ubiquitous Technologies, 1(3): 42:1–42:22, September 2017.

87. A. Huertas Celdrán, M. Gil Pérez, F. J. García Clemente, and G. Martínez Pérez. What private information are you disclosing? A privacy-preserving system supervised by yourself. In Proceedings of the 6th International Symposium on Cyberspace Safety and Security, pages 1221–1228, August 2014.

88. A. Huertas Celdrán, M. Gil Pérez, F. J. García Clemente, and G. Martínez Pérez. MASTERY: A multicontext-aware system that preserves the users’ privacy. In IEEE/IFIP Network Operations and Management Symposium, pages 523–528, April 2016.

89. A. Huertas Celdrán, M. Gil Pérez, F. J. García Clemente, and G. Martínez Pérez. Preserving patients’ privacy in health scenarios through a multicontext-aware system. Annals of Telecommunications, 72(9–10): 577–587, October 2017.

90. A. Huertas Celdrán, M. Gil Pérez, F. J. García Clemente, and G. Martínez Pérez. Policy-based management for green mobile networks through software-defined networking. Mobile Networks and Applications, In Press, 2016.

91. A. Huertas Celdrán, M. Gil Pérez, F. J. García Clemente, and G. Martínez Pérez. Enabling highly dynamic mobile scenarios with software defined networking. IEEE Communications Magazine, Feature Topics Issue on SDN Use Cases for Service Provider Networks, 55(4): 108–113, April 2017.