The emergence of technologies that could fundamentally change humans' role in society, challenge human epistemic agency and ontological status, and trigger unprecedented and unforeseen developments in all aspects of life, whether biological, social, cultural, or technological, is referred to as the Technological Singularity.

The Technological Singularity is most often associated with artificial intelligence, particularly artificial general intelligence (AGI).

As a result, it is frequently depicted as an intelligence explosion that drives advances in fields such as biotechnology, nanotechnology, and information technology, and that gives rise to entirely new innovations.

The Technological Singularity is sometimes referred to simply as the Singularity, but it should not be confused with a mathematical singularity, to which it bears only a passing resemblance.

Unlike its mathematical namesake, the Technological Singularity is a loosely defined term that may be interpreted in a variety of ways, each highlighting distinct aspects of technological advance.

The notion of the Technological Singularity dates back to the second half of the twentieth century and is commonly associated with the thoughts and writings of John von Neumann (1903–1957), Irving John Good (1916–2009), and Vernor Vinge (1944–2024).

Several universities, as well as governmental and corporate research institutes, have funded research on the Technological Singularity in order to better understand the future of technology and society.

Although it is the subject of profound philosophical and technical debate, the Technological Singularity remains a hypothesis, a conjecture, and a rather open-ended hypothetical idea.

While numerous scholars think that the Technological Singularity is unavoidable, the predicted date of its occurrence is continually pushed back.

Nonetheless, many studies hold that the question is not whether the Technological Singularity will occur, but rather when and how it will occur.

Ray Kurzweil proposed a more exact timeline, placing the emergence of the Technological Singularity in the mid-twenty-first century.

Others have sought to assign a date to this event, but there are no well-founded grounds in support of any such proposal.

Furthermore, without applicable measures or indicators, humanity would have no way of knowing when the Technological Singularity has occurred.

The history of artificial intelligence's unmet promises exemplifies the dangers of attempting to predict the future of technology.

The Technological Singularity is often described in terms of three themes: superintelligence, acceleration, and discontinuity.

The term "superintelligence" refers to a quantitative jump in artificial systems' cognitive abilities, putting them far beyond the capabilities of typical human cognition (as measured by standard IQ tests).

Superintelligence, however, may not be restricted to AI and computer technology.

It may also emerge in human agents through genetic engineering, biological computing systems, or hybrid artificial–natural systems.

According to some academics, superintelligence has boundless intellectual capabilities.

Acceleration refers to the curvature of the time curve for the advent of certain key events.

Technological advancement is often portrayed as a curve across time that highlights the discovery of major innovations, such as stone tools, the potter's wheel, the steam engine, electricity, atomic power, computers, and the internet.

Moore's law, which is more precisely an observation that has come to be treated as a law, describes the growth in computing capacity.

"Every two years, the number of transistors in a dense integrated circuit doubles," it says.
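
To make the doubling rule concrete, here is a minimal sketch that projects a transistor count forward under an idealized two-year doubling period. The function name `projected_transistors`, the starting count, and the strict two-year period are illustrative assumptions, not figures from any real chip.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a count forward assuming it doubles every `doubling_period` years.

    This is the idealized rule N(t) = N0 * 2 ** (t / T), nothing more.
    """
    return initial_count * 2 ** (years / doubling_period)


if __name__ == "__main__":
    start = 1_000_000  # hypothetical starting transistor count
    for years in (0, 2, 10, 20):
        print(years, round(projected_transistors(start, years)))
```

Under this rule, ten years corresponds to five doublings (a factor of 32) and twenty years to ten doublings (a factor of 1,024).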

In the event of the Technological Singularity, proponents expect the emergence of key technical advances and of new technological and scientific paradigms to follow a super-exponential curve.
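
One way to picture the difference between exponential and super-exponential growth is to look at doubling times. Under exponential growth each doubling takes the same amount of time; under one simple form of super-exponential growth each doubling takes less time than the one before. The sketch below is a toy model under those assumptions, not a forecast: the function `doubling_times` and the `shrink` factor are hypothetical names introduced purely for illustration.

```python
from itertools import accumulate


def doubling_times(n_doublings: int, first_period: float = 2.0,
                   shrink: float = 1.0) -> list[float]:
    """Return the elapsed time at which each successive doubling completes.

    shrink == 1.0 gives plain exponential growth (constant doubling period).
    shrink < 1.0 makes every doubling period shorter than the previous one,
    which is one simple (assumed) form of super-exponential growth.
    """
    periods = [first_period * shrink ** k for k in range(n_doublings)]
    return list(accumulate(periods))


if __name__ == "__main__":
    print("exponential:      ", doubling_times(5))
    print("super-exponential:", doubling_times(5, shrink=0.5))
```

With a constant period the elapsed time keeps growing in equal steps, whereas with `shrink=0.5` the doubling times form a geometric series that never reaches year 4 in this toy model. That kind of finite-time blow-up is what the "singularity" metaphor alludes to.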

One prediction regarding the Technological Singularity, for example, is that superintelligent systems would be able to self-improve (and self-replicate) in previously unimaginable ways at an unprecedented pace, pushing the technological development curve far beyond what has ever been witnessed.

The discontinuity of the Technological Singularity is referred to as an event horizon, by analogy with a physical concept associated with black holes.

The analogy to this physical phenomenon, however, should be used with care, and it should not be taken to ascribe the regularity and predictability of the physical world to the Technological Singularity.

An event horizon (also known as a prediction horizon) marks the limit of our knowledge about physical occurrences beyond a specific point in time.

It signifies that there is no way of knowing what will happen beyond the event horizon.

In the context of the Technological Singularity, the discontinuity or event horizon suggests that the technologies that precipitate it would cause disruptive changes in all areas of human life, developments about which experts cannot even conjecture.

The Technological Singularity is often associated with the end of humanity and of human civilization.

According to some research, social order will collapse, people will cease to be major actors, and human epistemic agency and primacy will be lost.

Superintelligent systems, it seems, will have no need for humans.

These systems will be able to self-replicate, develop, and build their own habitats, and humans will be seen either as obstacles or as unimportant, outdated things, much as humans now regard lesser species.

Nick Bostrom's Paperclip Maximizer illustrates one such scenario.

The Global Catastrophic Risks Survey lists AI as a possible threat to humanity's existence, with a reasonably high likelihood of causing human extinction, placing it on par with global pandemics, nuclear war, and global nanotech catastrophes.

However, the AI-related apocalyptic scenario is not a foregone conclusion of the Technological Singularity.

In other, more utopian scenarios, the Technological Singularity would usher in a new era of endless bliss by opening up new opportunities for humanity's infinite expansion.

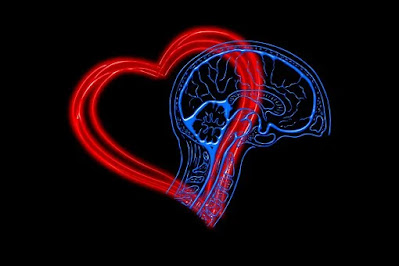

Another aspect of the Technological Singularity that requires serious consideration is how the arrival of superintelligence may imply the emergence of superethical capabilities in an all-knowing ethical agent.

Nobody knows, however, what superethical abilities might entail.

The fundamental problem is that superintelligent entities' higher intellectual abilities do not ensure a high degree of ethical probity, or indeed any ethical probity at all.

As a result, a superintelligent machine with almost infinite capacities but no ethics seems dangerous, to say the least.

A sizable number of scholars are skeptical about the advent of the Technological Singularity, and of superintelligence in particular.

They rule out the possibility of developing artificial systems with superhuman cognitive abilities, on either philosophical or scientific grounds.

Some contend that, although artificial intelligence is often at the heart of Technological Singularity claims, achieving human-level intelligence in artificial systems is impossible, and hence superintelligence, and with it the Technological Singularity, is a dream.

Such barriers, however, do not exclude the development of superhuman brains via the genetic modification of ordinary people, paving the way for transhumans, human-machine hybrids, and superhuman agents.

Other scholars question the validity of the very notion of the Technological Singularity, pointing out that such forecasts about future civilizations are based on speculation and guesswork.

Others argue that the promises of unrestrained technological advancement and limitless intellectual capacities made by the Technological Singularity legend are unfounded, since physical and information-processing resources are plainly limited in the cosmos, and particularly on Earth.

On this view, any promises of self-replicating, self-improving artificial agents capable of super-exponential technological advancement are false, since such systems will lack the creativity, will, and incentive to drive their own evolution.

Meanwhile, social critics point out that the boundless technological advancement promised by superintelligence would not alleviate issues like overpopulation, environmental degradation, poverty, and unparalleled inequality.

Indeed, the widespread unemployment projected as a consequence of AI-assisted mass automation of labor would bar significant segments of the population from contributing to society and result in unparalleled social upheaval, delaying the development of new technologies.

As a result, political or societal pressures will stifle technological advancement rather than speed it up.

While the Technological Singularity cannot be ruled out on logical grounds, the technical hurdles it faces, even if limited to those that can presently be identified, are considerable.

Nobody expects the Technological Singularity to happen with today's computers and other technology, but proponents of the concept regard these obstacles as "technical challenges to be overcome" rather than possible show-stoppers.

However, there is a long list of technological issues to be overcome, and Murray Shanahan's The Technological Singularity (2015) gives a fair overview of some of them.

There are also some significant nontechnical issues, such as the problem of training superintelligent systems, the ontology of artificial or machine consciousness and self-aware artificial systems, the embodiment of artificial minds or vicarious embodiment processes, and the rights granted to superintelligent systems, as well as their role in society and any limitations placed on their actions, if such limitations are even possible.

These issues are currently confined to the realms of technological and philosophical discussion.

~ Jai Krishna Ponnappan


See also:

Bostrom, Nick; de Garis, Hugo; Diamandis, Peter; Digital Immortality; Goertzel, Ben; Kurzweil, Ray; Moravec, Hans; Post-Scarcity, AI and; Superintelligence.

References And Further Reading

Bostrom, Nick. 2014. Superintelligence: Paths, Dangers, Strategies. Oxford, UK: Oxford University Press.

Chalmers, David. 2010. “The Singularity: A Philosophical Analysis.” Journal of Consciousness Studies 17: 7–65.

Eden, Amnon H. 2016. The Singularity Controversy. Sapience Project. Technical Report STR 2016-1. January 2016.

Eden, Amnon H., Eric Steinhart, David Pearce, and James H. Moor. 2012. “Singularity Hypotheses: An Overview.” In Singularity Hypotheses: A Scientific and Philosophical Assessment, edited by Amnon H. Eden, James H. Moor, Johnny H. Søraker, and Eric Steinhart, 1–12. Heidelberg, Germany: Springer.

Good, I. J. 1966. “Speculations Concerning the First Ultraintelligent Machine.” Advances in Computers 6: 31–88.

Kurzweil, Ray. 2005. The Singularity Is Near: When Humans Transcend Biology. New York: Viking.

Sandberg, Anders, and Nick Bostrom. 2008. Global Catastrophic Risks Survey. Technical Report #2008/1. Oxford University, Future of Humanity Institute.

Shanahan, Murray. 2015. The Technological Singularity. Cambridge, MA: The MIT Press.

Ulam, Stanislaw. 1958. “Tribute to John von Neumann.” Bulletin of the American Mathematical Society 64, no. 3, pt. 2 (May): 1–49.

Vinge, Vernor. 1993. “The Coming Technological Singularity: How to Survive in the Post-Human Era.” In Vision 21: Interdisciplinary Science and Engineering in the Era of Cyberspace, 11–22. Cleveland, OH: NASA Lewis Research Center.