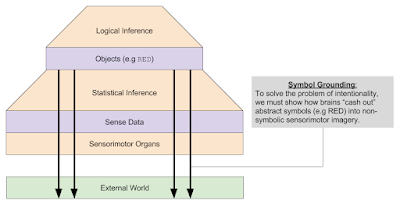

The symbol grounding problem asks how symbols might be linked to their physical counterparts.

In other words, the symbol grounding problem is concerned with how ideas inside an intelligent agent's representation can be linked to their referents in the real world.

Stevan Harnad (1990) likened the situation to a symbol/symbol merry-go-round, in which a meaningless symbol can only ever be grounded in other, equally meaningless symbols.
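The merry-go-round can be made concrete with a toy dictionary in which every symbol is defined only by other symbols. This is an illustration under stated assumptions, not Harnad's own formulation: the dictionary entries, the `GROUNDED` set, and the `is_grounded` helper are all hypothetical. The function follows definitions and reports whether any chain of lookups ever reaches a symbol tied to something outside the symbol system.

```python
# Hypothetical "symbol-only" dictionary: every symbol is defined purely
# in terms of other symbols, with no link to anything outside the system.
SYMBOL_DICTIONARY: dict[str, list[str]] = {
    "zebra": ["horse", "stripes"],
    "horse": ["animal", "mane"],
    "stripes": ["lines", "pattern"],
    "animal": ["living", "thing"],
    "mane": ["hair", "horse"],
    "lines": ["pattern"],
    "pattern": ["lines"],
    "living": ["thing"],
    "thing": ["living"],
    "hair": ["thing"],
}

# Symbols tied to something outside the symbol system (e.g. a sensor).
# Empty here, which is exactly the grounding problem.
GROUNDED: set[str] = set()


def is_grounded(symbol: str,
                dictionary: dict[str, list[str]],
                grounded: set[str]) -> bool:
    """Return True if following definitions from `symbol` ever reaches a grounded symbol."""
    seen: set[str] = set()
    stack = [symbol]
    while stack:
        current = stack.pop()
        if current in grounded:
            return True
        if current in seen:
            continue  # already explored: we have gone once around the merry-go-round
        seen.add(current)
        stack.extend(dictionary.get(current, []))
    return False
```

With `GROUNDED` empty, `is_grounded("zebra", SYMBOL_DICTIONARY, GROUNDED)` returns `False`: every lookup only leads to more symbols. Passing `{"stripes"}` as the grounded set (say, via a hypothetical stripe detector) makes `"zebra"` grounded, while `"pattern"` still only leads back to `"lines"` and never reaches it.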

A cognitive model is a representation of the mind in symbols.

To construct artificial cognitive systems, several types of representations are required.

There are two types of semantics in a mental representation:

- lexical (or semantic system) and

- compositional (or connectionist system) semantics.

- Lexical semantics is the meaning of a single word, whereas compositional semantics is the meaning of a set of words in relation to one another.

- Compositional semantics is what traditional cognitive research studies.
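As a minimal illustration of the difference, assume a toy world in which each entity carries a set of properties. A word's lexical meaning is then the set of entities it applies to, and the meaning of a phrase is composed by intersecting its words' meanings. This set-intersection rule is a common textbook simplification for phrases like "red ball"; it is an assumption made for the example, not the model the text refers to, and `WORLD`, `lexical_meaning`, and `compositional_meaning` are hypothetical names.

```python
# Hypothetical toy world: each entity is described by a set of properties.
WORLD: dict[str, set[str]] = {
    "e1": {"red", "ball"},
    "e2": {"blue", "ball"},
    "e3": {"red", "cube"},
}


def lexical_meaning(word: str) -> set[str]:
    """Lexical semantics: the meaning of one word, modeled as the entities it applies to."""
    return {entity for entity, props in WORLD.items() if word in props}


def compositional_meaning(phrase: str) -> set[str]:
    """Compositional semantics: combine the word meanings according to their relation.

    Assumes a simple intersective phrase such as 'red ball', where the
    phrase denotes the entities that satisfy every word at once.
    """
    words = phrase.split()
    if not words:
        return set()
    return set.intersection(*(lexical_meaning(w) for w in words))
```

Here `lexical_meaning("red")` gives `{"e1", "e3"}`, while `compositional_meaning("red ball")` narrows it to `{"e1"}`: the phrase picks out something neither word picks out alone.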

Cognitive architectures such as ACT-R are hybrid systems: they represent knowledge as chunks, using both lexical and compositional representations, and use pattern matching to select the knowledge piece that matches.
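A rough sketch of chunk-based pattern matching, assuming chunks are typed slot-value records loosely modeled on ACT-R's declarative memory. The `Chunk` class, `matches`, `retrieve`, and the activation numbers are hypothetical simplifications: here activation is a fixed number used only to choose among several matches, whereas ACT-R itself computes activation dynamically (from factors such as how recently and how often a chunk was used).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Chunk:
    """A knowledge piece: a type plus named slots (loosely ACT-R-style)."""
    name: str
    chunk_type: str
    slots: dict[str, str] = field(default_factory=dict)
    activation: float = 0.0  # hypothetical fixed value, used only to break ties


def matches(chunk: Chunk, chunk_type: str, pattern: dict[str, str]) -> bool:
    """True if the chunk has the requested type and every requested slot value."""
    return chunk.chunk_type == chunk_type and all(
        chunk.slots.get(slot) == value for slot, value in pattern.items()
    )


def retrieve(memory: list[Chunk], chunk_type: str,
             pattern: dict[str, str]) -> Optional[Chunk]:
    """Pick the matching chunk with the highest activation, or None if none match."""
    candidates = [c for c in memory if matches(c, chunk_type, pattern)]
    return max(candidates, key=lambda c: c.activation, default=None)


memory = [
    Chunk("fact-3+4", "addition-fact",
          {"addend1": "3", "addend2": "4", "sum": "7"}, activation=1.2),
    Chunk("fact-3+5", "addition-fact",
          {"addend1": "3", "addend2": "5", "sum": "8"}, activation=0.4),
    Chunk("dog", "animal", {"legs": "4"}, activation=2.0),
]
```

Requesting an `addition-fact` with `addend1` = `"3"` and `addend2` = `"4"` returns the chunk whose `sum` slot is `"7"`; requesting only `addend1` = `"3"` matches two chunks, and the one with higher activation wins. Every slot value is itself just another symbol: nothing in the `dog` chunk ties it to an actual dog, which is the grounding gap this section describes.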

In such architectures, sensory input is itself represented as chunks.

However, none of these hybrid systems describes how these knowledge pieces (chunks) are tied to the outside world.

As a result, the symbol grounding problem prevents artificial cognitive systems from reaching their full cognitive potential, particularly in perception and motor abilities.

To address the symbol grounding problem for cognitive models, a new kind of memory, called visual patterns, has been developed.

- Visual patterns are visual representations of real-world objects that are linked to knowledge elements (chunks).

- Using a more accurate representation of vision, cognitive models can communicate with one another, which helps address the symbol grounding problem.
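One way to picture a visual pattern is as a small pixel template linked to a chunk: when the template is found on the screen, the chunk becomes tied to a concrete location in the world. The sketch below uses plain exact template matching on a binary grid, an assumption chosen for brevity. It is not the visual pattern matching algorithm of Tehranchi and Ritter (2018), and `VISUAL_PATTERNS`, `find_pattern`, and `ground_chunks` are hypothetical names.

```python
# A binary "screen" or pattern, stored as a list of rows of 0/1 pixels.
Grid = list[list[int]]


def find_pattern(screen: Grid, pattern: Grid) -> list[tuple[int, int]]:
    """Return the (row, col) of every top-left position where `pattern` exactly matches `screen`."""
    ph, pw = len(pattern), len(pattern[0])
    sh, sw = len(screen), len(screen[0])
    hits = []
    for r in range(sh - ph + 1):
        for c in range(sw - pw + 1):
            if all(screen[r + i][c + j] == pattern[i][j]
                   for i in range(ph) for j in range(pw)):
                hits.append((r, c))
    return hits


# Hypothetical visual patterns, each linked to the chunk it grounds.
VISUAL_PATTERNS: dict[str, Grid] = {
    "button-chunk": [[1, 1], [1, 1]],
    "line-chunk": [[1, 1, 1]],
}


def ground_chunks(screen: Grid) -> dict[str, list[tuple[int, int]]]:
    """Map each chunk to the screen locations where its visual pattern appears."""
    return {chunk: find_pattern(screen, pat)
            for chunk, pat in VISUAL_PATTERNS.items()}


screen: Grid = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 0, 0],
]
```

Here `ground_chunks(screen)` returns `{"button-chunk": [(1, 1)], "line-chunk": [(4, 0)]}`, so each chunk now points to where its pattern appears on the example screen. A realistic system would also need tolerance for noise, scale, and color, which exact matching ignores.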


References And Further Reading

Harnad, Stevan. 1990. “The Symbol Grounding Problem.” Physica D: Nonlinear Phenomena 42, no. 1–3 (June): 335–46.

Ritter, Frank E., Farnaz Tehranchi, and Jacob D. Oury. 2018. “ACT-R: A Cognitive Architecture for Modeling Cognition.” Wiley Interdisciplinary Reviews: Cognitive Science 10, no. 4: 1–19.

Tehranchi, Farnaz, and Frank E. Ritter. 2018. “Modeling Visual Search in Interactive Graphic Interfaces: Adding Visual Pattern Matching Algorithms to ACT-R.” In Proceedings of the 16th International Conference on Cognitive Modeling, 162–67. Madison: University of Wisconsin.