Algorithmic composition is a composer's approach to producing new musical material by following a predefined, limited set of rules or procedures. In place of conventional musical notation, the algorithm may instead be a set of instructions, defined by the composer, that the performer follows during a performance. According to one school of thought, algorithmic composition should involve as little human intervention as possible.

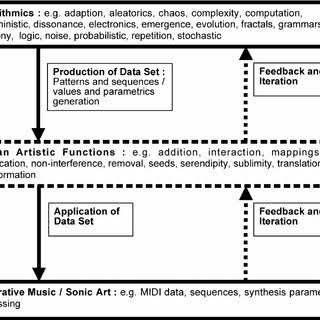

Artificial intelligence systems based on generative grammar, knowledge-based systems, genetic algorithms, and, more recently, artificial neural networks trained with deep learning have all been used to compose music.

The use of algorithms to assist in composing music is far from new. Music theory treatises dating back thousands of years provide early examples: they compiled lists of common-practice rules and conventions that composers followed in order to write music correctly.

Johann Joseph Fux's Gradus ad Parnassum (1725), which sets out the precise rules of species counterpoint, is an early example of algorithmic composition. Intended as an instructional tool, species counterpoint presented five techniques for composing complementary lines against a primary or fixed melody (the cantus firmus). Followed to the letter, Fux's method leaves little room to deviate from the specified rules.
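The rule-bound character of species counterpoint can be illustrated with a small checker. This is a minimal Python sketch, assuming first species (note against note), MIDI note numbers, and a counterpoint that lies above the cantus firmus. It encodes only three of Fux's rules (consonant intervals only, a perfect consonance at the start and end, no consecutive fifths or octaves), and the function name `check_first_species` is hypothetical.

```python
# Interval classes in semitones above the cantus firmus, reduced mod 12.
PERFECT = {0, 7}                 # unison/octave and perfect fifth
IMPERFECT = {3, 4, 8, 9}         # minor/major thirds and sixths
CONSONANT = PERFECT | IMPERFECT  # the fourth (5) counts as dissonant here


def check_first_species(cantus: list[int], counter: list[int]) -> list[str]:
    """Return rule violations for a first-species exercise (empty list = none found).

    Hypothetical helper: checks only a small subset of Fux's rules.
    """
    if not cantus or len(cantus) != len(counter):
        return ["voices must be non-empty and have the same number of notes"]
    intervals = [(c - f) % 12 for f, c in zip(cantus, counter)]
    errors = []
    for i, iv in enumerate(intervals):
        if iv not in CONSONANT:
            errors.append(f"note {i}: dissonant interval")
    if intervals[0] not in PERFECT or intervals[-1] not in PERFECT:
        errors.append("must begin and end on a perfect consonance")
    for i in range(1, len(intervals)):
        moved = cantus[i] != cantus[i - 1]
        # Same perfect interval twice in a row while the voices move.
        if moved and intervals[i] in PERFECT and intervals[i] == intervals[i - 1]:
            name = "fifths" if intervals[i] == 7 else "unisons/octaves"
            errors.append(f"notes {i - 1}-{i}: consecutive {name}")
    return errors


cantus = [60, 62, 64, 62, 60]                             # C4 D4 E4 D4 C4
print(check_first_species(cantus, [72, 71, 67, 69, 72]))  # no violations
print(check_first_species(cantus, [67, 69, 71, 69, 72]))  # consecutive fifths
```

Each rule is a mechanical test on intervals, which is what makes the method algorithmic: a program can apply it without musical judgment.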

Chance was often used in early instances of algorithmic composition that involved little human intervention. Chance music, also known as aleatoric music, dates back to the Renaissance. The most renowned early example of the technique is attributed to Mozart: a "Musikalisches Würfelspiel" (musical dice game) appears in a published manuscript attributed to him and dated 1787.

To assemble a 16-bar waltz, the performer rolls dice to choose each bar at random from a pool of 176 precomposed one-bar fragments.
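The dice game is simple enough to simulate directly. The Python sketch below assumes the commonly described scheme in which two six-sided dice are thrown for each bar, giving a sum from 2 to 12 (11 outcomes × 16 bars = 176 fragments). The lookup table is a hypothetical placeholder that simply numbers the fragments in order; the published game uses its own table.

```python
import random
from typing import Optional

NUM_BARS = 16               # bars in the waltz
DICE_SUMS = range(2, 13)    # two six-sided dice: sums 2..12 (11 outcomes)

# Hypothetical lookup table: TABLE[bar][dice_sum] -> fragment number 1..176.
# Placeholder numbering only; it is not the table printed with the original game.
TABLE = [
    {s: bar * len(DICE_SUMS) + (s - 2) + 1 for s in DICE_SUMS}
    for bar in range(NUM_BARS)
]


def roll_two_dice(rng: random.Random) -> int:
    """Sum of two fair six-sided dice."""
    return rng.randint(1, 6) + rng.randint(1, 6)


def compose_waltz(seed: Optional[int] = None) -> list[int]:
    """Choose one precomposed fragment per bar by rolling two dice."""
    rng = random.Random(seed)
    return [TABLE[bar][roll_two_dice(rng)] for bar in range(NUM_BARS)]


print(compose_waltz(seed=1787))  # 16 fragment numbers; the same seed gives the same waltz
```

Because sums of two dice are not uniformly distributed (a 7 is six times as likely as a 2 or a 12), some fragments are chosen far more often than others.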

In the musical dice game, chance affects only the sequence of brief precomposed musical snippets, but in John Cage's 1951 work Music of Changes, chance governs almost all choices. Cage consulted the ancient Chinese divination text I Ching (The Book of Changes) to make every musical decision. For playability, his friend David Tudor, the work's performer, had to convert Cage's highly explicit and intricate score into something closer to conventional notation.

These two works illustrate two types of aleatoric music: in one, the composer uses random processes to produce a fixed score; in the other, the sequence of the musical pieces is left to the performer or to chance.

Arnold Schoenberg created the twelve-tone method, an algorithmic composition process closely related to mathematical fields such as combinatorics and group theory. Twelve-tone composition is an early form of serialism in which each of the twelve tones of traditional Western music is given equal weight.

After the twelve tones are placed in a chosen row with no repeated pitches, the row is transposed to begin on each pitch in turn until a 12 × 12 matrix is formed. The matrix contains all variants of the original tone row (its transpositions, inversions, retrogrades, and retrograde inversions) that the composer may use for pitch material. The rows may be used to write melodic lines (a horizontal setting), or they may be further separated into subsets to provide harmonic content (a vertical collection of sounds).
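The matrix construction can be sketched in a few lines of Python. Pitch classes are written as integers 0 to 11 (0 = C), and the example row is an arbitrary, hypothetical permutation rather than a row from any particular work. Row 0 of the result is the prime form, column 0 is its inversion, and every other row is the prime form transposed to begin on the corresponding pitch of that inversion.

```python
def twelve_tone_matrix(row: list[int]) -> list[list[int]]:
    """Build the 12 x 12 matrix: rows read left to right are prime forms (P),
    columns read top to bottom are inversions (I)."""
    if sorted(row) != list(range(12)):
        raise ValueError("row must contain each pitch class 0-11 exactly once")
    # Inversion starting on the same first pitch: every interval is mirrored.
    inversion = [(2 * row[0] - p) % 12 for p in row]
    # Row i is the prime form transposed so that it starts on inversion[i].
    return [[(p - row[0] + start) % 12 for p in row] for start in inversion]


NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
row = [0, 11, 7, 8, 3, 1, 2, 10, 6, 5, 4, 9]  # hypothetical example row

matrix = twelve_tone_matrix(row)
for r in matrix:
    print(" ".join(f"{NAMES[p]:>2}" for p in r))
```

Reading the rows backward gives the retrogrades (R), and reading the columns from bottom to top gives the retrograde inversions (RI), so the matrix holds up to 48 row forms.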

Later composers such as Pierre Boulez and Karlheinz Stockhausen experimented with serializing additional musical aspects by building matrices that included dynamics and timbre.

Some algorithmic composition approaches arose in response to serialism, rejecting or modifying its techniques.

According to Iannis Xenakis, serialist composers concentrated excessively on harmony as a succession of interconnected linear objects (the establishment of linear tone rows), and their procedures grew too complex for the listener to follow. He proposed new ways to adapt nonmusical algorithms for music creation that could work with dense masses of sound, a strategy that, in his view, freed music from its linear concerns.

Xenakis was inspired by scientific studies of natural and social phenomena, such as moving particles in a cloud or thousands of people assembled at a political rally, and he based his compositions on the application of probability theory and stochastic processes. For example, he used Markov chains to manipulate musical elements such as pitch, timbre, and dynamics, gradually building thick-textured sound masses over time.

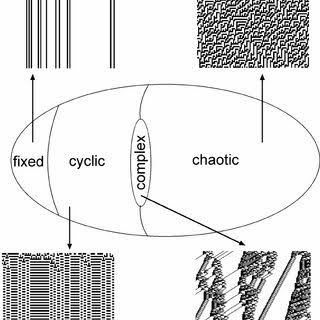

In a Markov chain, the probability of the next event depends on the current state (in the simplest case, the event that immediately precedes it). Xenakis's use of such algorithms therefore mixed indeterminate aspects, like those of Cage's chance music, with deterministic elements like those of serialism.
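A first-order Markov chain can be sketched as a table of transition probabilities. The pitch set and probabilities below are hypothetical, chosen only to illustrate the mechanism; this is not a reconstruction of Xenakis's own procedures.

```python
import random
from typing import Optional

# Hypothetical transition table: TRANSITIONS[current][next] = probability.
# Each row sums to 1; the next pitch depends only on the current one.
TRANSITIONS = {
    "C": {"C": 0.1, "E": 0.5, "G": 0.4},
    "E": {"C": 0.3, "E": 0.1, "G": 0.6},
    "G": {"C": 0.6, "E": 0.3, "G": 0.1},
}


def markov_melody(start: str, length: int, seed: Optional[int] = None) -> list[str]:
    """Generate `length` pitches, sampling each from the row of the previous pitch."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        row = TRANSITIONS[melody[-1]]
        melody.append(rng.choices(list(row), weights=list(row.values()))[0])
    return melody


print(markov_melody("C", 16, seed=1955))
```

Each individual pitch is random, but the table constrains which successions are likely. That combination of chance and fixed structure is the mix of indeterminate and deterministic behavior the paragraph describes.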

Xenakis called this approach stochastic music. It prompted a new generation of composers to incorporate more complex algorithms into their work.

The calculations these algorithms required eventually made computers necessary. Xenakis was a pioneer in the use of computers in music, using them to help calculate the outcomes of his stochastic and probabilistic procedures.

With his album Ambient 1: Music for Airports (1978), Brian Eno popularized ambient music, building on composer Erik Satie's notion of background music played by live performers, known as furniture music. The piece used seven tape recorders, each playing a loop of a different length that held a distinct pitch. Because the loops drift in and out of alignment, the pitches fall into a new sequence on each pass, creating a constantly shifting melody. Since the inputs are the same, however, the composition unfolds in the same way each time it is performed.
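The phasing effect of loops with different lengths can be simulated. In this Python sketch the pitches and loop lengths are hypothetical (whole seconds, chosen as distinct primes so the combined pattern takes a long time to repeat); they are not the values Eno used. Each loop sounds its pitch once per cycle, and all loops start together at time 0.

```python
import math

# Hypothetical loops: (pitch, loop length in whole seconds).
LOOPS = [("Ab", 17), ("C", 19), ("Db", 23), ("Eb", 29), ("F", 31), ("G", 37), ("Bb", 41)]


def note_events(duration: int) -> list[tuple[int, str]]:
    """All (onset time, pitch) pairs before `duration` seconds, in time order."""
    return sorted(
        (t, pitch) for pitch, period in LOOPS for t in range(0, duration, period)
    )


def pitch_order(start: int, end: int) -> list[str]:
    """The order in which pitches sound within the window [start, end)."""
    return [pitch for t, pitch in note_events(end) if t >= start]


print(pitch_order(40, 80))    # one ordering of the pitches...
print(pitch_order(200, 240))  # ...and a different one later on
# The whole texture repeats only after the least common multiple of the lengths.
print(math.lcm(*(period for _, period in LOOPS)), "seconds")
```

Nothing in the sketch is random: running it twice gives identical output, just as a fixed set of loops produces the same evolving piece at every performance.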

Ambient and generative music are both forerunners of autonomous, computer-based algorithmic composition, most of which now uses artificial intelligence techniques.

Noam Chomsky and his collaborators developed generative grammar, a set of rules for describing natural languages. The rules define a range of possible serial orderings of items by rewriting hierarchically structured elements. Adapted for algorithmic composition, generative grammars can be used to generate musical passages.
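A toy context-free grammar shows the rewriting idea. In this Python sketch the grammar is hypothetical: a phrase is rewritten as an antecedent and a consequent, each of which is rewritten as a motif followed by a cadence, until only note names remain. It illustrates hierarchical rewriting in general, not Cope's program.

```python
import random
from typing import Optional

# Hypothetical grammar: keys are non-terminals, each with alternative productions.
# Any symbol that is not a key (here, a note name) is a terminal.
GRAMMAR = {
    "Phrase": [["Antecedent", "Consequent"]],
    "Antecedent": [["Motif", "HalfCadence"]],
    "Consequent": [["Motif", "AuthenticCadence"]],
    "Motif": [["C", "E", "G"], ["E", "D", "C"], ["G", "F", "E"]],
    "HalfCadence": [["D", "G"]],
    "AuthenticCadence": [["G", "C"], ["B", "C"]],
}


def expand(symbol: str, rng: random.Random) -> list[str]:
    """Recursively rewrite `symbol` until only terminals remain."""
    if symbol not in GRAMMAR:
        return [symbol]
    production = rng.choice(GRAMMAR[symbol])
    result = []
    for s in production:
        result.extend(expand(s, rng))
    return result


def generate(seed: Optional[int] = None) -> list[str]:
    """Derive one complete phrase from the start symbol."""
    return expand("Phrase", random.Random(seed))


print(generate(seed=1))
```

The hierarchy guarantees structure (every phrase ends with a cadence on C), while the choice among alternative productions supplies variety.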

Experiments in Musical Intelligence (1996) by David Cope is possibly the best-known use of generative grammar. Cope taught his program to produce music in the styles of a variety of composers, including Bach, Mozart, and Chopin.

In knowledge-based systems, information about the genre of music the composer wants to replicate is encoded as a database of facts, which can be used to build an artificial expert that assists the composer.

Genetic algorithms are a composition technique that mimics the process of biological evolution. A population of randomly generated compositions is evaluated for its similarity to the intended musical output. Then artificial mechanisms modeled on natural processes, such as selection, crossover, and mutation, are applied to increase the likelihood that musically attractive qualities become more common in subsequent generations. The composer may also interact with the system, stimulating new ideas in both the computer and the spectator.
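A minimal genetic algorithm can be sketched in Python, assuming that "similarity to the intended output" means counting the notes that match a hypothetical target melody. Truncation selection, one-point crossover, and random mutation stand in for the evolutionary mechanisms mentioned above; real systems use much richer fitness measures.

```python
import random

PITCHES = list(range(60, 72))               # MIDI notes C4..B4
TARGET = [60, 62, 64, 65, 67, 65, 64, 62]   # hypothetical "intended output"


def fitness(melody: list[int]) -> int:
    """Similarity to the target: number of positions holding the same pitch."""
    return sum(a == b for a, b in zip(melody, TARGET))


def crossover(a: list[int], b: list[int], rng: random.Random) -> list[int]:
    """One-point crossover: the head of one parent joined to the tail of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def mutate(melody: list[int], rng: random.Random, rate: float = 0.1) -> list[int]:
    """Replace each note with a random pitch with probability `rate`."""
    return [rng.choice(PITCHES) if rng.random() < rate else p for p in melody]


def evolve(generations: int = 200, size: int = 50, seed: int = 0):
    """Return the best melody found and the best fitness recorded per generation."""
    rng = random.Random(seed)
    population = [[rng.choice(PITCHES) for _ in TARGET] for _ in range(size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        if history[-1] == len(TARGET):
            break
        parents = population[: size // 2]  # truncation selection: keep the fitter half
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(size - len(parents))
        ]
        population = parents + children    # parents survive, so the best never gets worse
    return max(population, key=fitness), history


best, history = evolve()
print(best, fitness(best), "generations:", len(history))
```

In an interactive setup, `fitness` could be replaced by ratings from the composer, which is one way the human and the system can shape the results together.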

More recent AI composition methods use deep learning systems such as generative adversarial networks, or GANs. In music, a GAN pits a generator, which creates new music based on learned knowledge of a compositional style, against a discriminator, which tries to tell the generator's output apart from music by a human composer. When the discriminator catches the generator's output, that feedback is used to improve the generator; both networks are updated as training continues, until the discriminator can no longer distinguish between genuine and generated musical content.

The repurposing of non-musical algorithms for musical purposes is rapidly driving music in new and fascinating directions.


See also:

Computational Creativity.

Further Reading:

Cope, David. 1996. Experiments in Musical Intelligence. Madison, WI: A-R Editions.

Eigenfeldt, Arne. 2011. “Towards a Generative Electronica: A Progress Report.” eContact! 14, no. 4: n.p. https://econtact.ca/14_4/index.html.

Eno, Brian. 1996. “Evolving Metaphors, in My Opinion, Is What Artists Do.” In Motion Magazine, June 8, 1996. https://inmotionmagazine.com/eno1.html.

Nierhaus, Gerhard. 2009. Algorithmic Composition: Paradigms of Automated Music Generation. New York: Springer.

Parviainen, Tero. “How Generative Music Works: A Perspective.” http://teropa.info/loop/#/title.