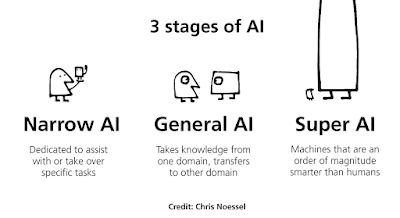

There are two types of artificial intelligence: general (also called strong or complete) and narrow (also called weak or specialized).

General AI of the kind seen in science fiction does not yet exist in the real world.

Machines with general intelligence would be capable of completing every intellectual task that humans can perform.

Such a system would also appear to think in abstract terms, make connections, and express novel ideas in the same way that people do.

It would be capable of reasoning, planning, and recalling information from the past.

While the aim of general AI has yet to be achieved, there are more and more instances of narrow AI.

These are machines that perform at human (or even superhuman) levels on certain tasks.

Computers that have learned to play complex games show skills, strategies, and behaviors comparable to, if not better than, those of the most skilled human players.

AI systems have also been developed that can translate between languages in real time, interpret and respond to natural language (both spoken and written), and recognize images (that is, identify and sort photos or images based on their content).

However, the ability to generalize knowledge or skills is still largely a human accomplishment.

Nonetheless, a great deal of research on general AI is under way.

It will be difficult to determine when a machine has reached human-level intelligence.

Several tests, some serious and some humorous, have been proposed to determine whether a machine has reached the level of general AI.

The Turing Test is arguably the best known of these.

A machine and a person converse, unseen, while a second human listens in.

The human eavesdropper must figure out which speaker is the machine and which is the human.

The machine passes the test if it can fool the human evaluator a prescribed percentage of the time.
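The pass criterion described above amounts to comparing a success rate against a threshold. The following is only a rough sketch of that arithmetic: the function name, the trial data, and the threshold values are hypothetical, since the text does not say what the prescribed percentage is.

```python
def passes_turing_test(evaluator_fooled, required_rate):
    """Return True if the machine fooled the evaluator often enough.

    evaluator_fooled: list of booleans, one per conversation; True means the
        evaluator mistook the machine for the human.
    required_rate: the prescribed fraction of conversations (hypothetical value).
    """
    if not evaluator_fooled:
        raise ValueError("at least one trial is required")
    fooled_rate = sum(evaluator_fooled) / len(evaluator_fooled)
    return fooled_rate >= required_rate


# Hypothetical results from ten conversations: the evaluator was fooled three times.
trials = [True, False, False, True, False, False, False, True, False, False]

print(passes_turing_test(trials, required_rate=0.3))  # True
print(passes_turing_test(trials, required_rate=0.5))  # False
```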

The Coffee Test is a more fanciful test in which a machine enters a typical household and makes coffee.

It has to find the coffee machine, find the coffee, add water, brew the coffee, and pour it into a cup.

Another is the Flat Pack Furniture Test, in which a machine receives, unpacks, and assembles a piece of furniture based only on the instructions supplied.

Some scientists, as well as many science fiction writers and fans, believe that once intelligent machines reach a tipping point, they will be able to improve themselves exponentially.

One conceivable result is AI-based beings that far exceed human capabilities.

The point at which AI assumes control of its own self-improvement is known as the Singularity, or artificial superintelligence (ASI).

If ASI is achieved, it will have unforeseeable consequences for human society.

Some pundits worry that ASI would jeopardize humanity's safety and dignity.

Whether the Singularity will ever happen, and how dangerous it would be, remains in dispute.

Narrow AI applications are becoming more common around the world.

Machine learning (ML) is at the heart of most new applications, and most AI examples in the news are connected to this subset of the technology.

Machine learning programs differ from traditional, conventional algorithms.

In programs that cannot learn, a programmer explicitly writes code to account for every action the algorithm takes.

Every decision made along the way is governed by the programmer's rules.

This requires the programmer to imagine and code for every circumstance the algorithm may face.

Such code is bulky and often inadequate, especially when it must be updated repeatedly to accommodate new or unanticipated scenarios.

Hard-coded algorithms reach the limit of their usefulness in cases where the criteria for optimal decisions are unclear or impossible for a human programmer to foresee.
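To make the limitation concrete, here is a minimal sketch of a hard-coded program. The spam-filtering scenario, the function name, and the rules themselves are hypothetical and chosen only for illustration. Every decision comes from a rule the programmer wrote, so a case the programmer did not anticipate is handled wrongly.

```python
def is_spam_hard_coded(message: str) -> bool:
    """Flag a message as spam using rules a programmer wrote by hand."""
    text = message.lower()
    # Each rule covers one circumstance the programmer anticipated.
    if "free money" in text:
        return True
    if "click here" in text and "winner" in text:
        return True
    # Anything the programmer did not foresee falls through to "not spam".
    return False


anticipated = "You are a WINNER, click here to claim your prize"
unanticipated = "Fr3e m0ney waiting for you"  # obfuscated spelling, no matching rule

print(is_spam_hard_coded(anticipated))    # True
print(is_spam_hard_coded(unanticipated))  # False
```

Catching the second message would require yet another hand-written rule, which is how such code grows bulky over time.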

Machine learning is the process of training a computer to detect and identify patterns from examples rather than from predefined rules.

According to Google engineer Jason Mayes, this is achieved by reviewing extremely large quantities of training data or by going through some other kind of programmed learning step.

Processing this data allows new patterns to be extracted.

The system can then classify previously unseen data based on the patterns it has already found.
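The idea of learning from examples and then classifying unseen data can be sketched with a very small nearest-centroid classifier. This is one simple technique picked for illustration, not the method Mayes describes. The fruit measurements and function names are hypothetical, and real systems train on far larger datasets.

```python
from statistics import mean


def train_centroids(examples):
    """Learn one average feature vector (centroid) per label from labeled examples."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def classify(centroids, features):
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    def distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: distance(centroids[label]))


# Hypothetical training data: (weight in grams, diameter in cm) -> fruit name.
training_data = [
    ((150, 7.0), "apple"),
    ((170, 7.5), "apple"),
    ((160, 7.2), "apple"),
    ((1200, 20.0), "melon"),
    ((1500, 22.0), "melon"),
    ((1350, 21.0), "melon"),
]

centroids = train_centroids(training_data)

# Neither of these measurements appears in the training data.
print(classify(centroids, (180, 7.8)))    # apple
print(classify(centroids, (1100, 19.0)))  # melon
```

No rule such as "melons weigh more than one kilogram" was written by hand. The boundary between the two classes follows from the examples.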

Machine learning allows an algorithm to recognize, on its own, the patterns or rules underlying decision-making processes.

Machine learning also allows a system's output to improve over time as it gains more experience (Mayes 2017).

A human programmer continues to play a vital role in this learning process, influencing results through choices such as designing the specific learning algorithm, selecting the training data, and choosing other design elements and settings.

Once it is up and running, machine learning is powerful because it can adapt and improve its ability to classify new data without direct human intervention.

In other words, the quality of the output improves as the system gains experience.

Artificial intelligence is a broad term that refers to the science of making computers intelligent.

Scientists define AI as a computer system that can collect data and use it to make decisions or solve problems.

Another popular scientific definition of AI is "a software program paired with hardware that can receive (or sense) inputs from the world around it, evaluate and analyze those inputs, and create outputs and suggestions without the assistance of a person."

When programmers say an AI system can learn, they are referring to the program's ability to change its own processes in order to produce more accurate outputs or predictions.
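One way to picture a program changing its own processes is a single adjustable number that the program nudges after each example so that its predictions get closer to the targets. The sketch below uses a basic gradient-descent update. The data, the learning rate, and the function names are assumptions made for illustration, not a description of any particular AI system.

```python
def train_step(weight, x, target, learning_rate=0.1):
    """Nudge the weight to reduce the squared error on one example."""
    prediction = weight * x
    error = prediction - target
    # The gradient of 0.5 * error**2 with respect to the weight is error * x.
    return weight - learning_rate * error * x


# Hypothetical examples that follow the pattern target = 2 * x.
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]


def mean_squared_error(weight):
    """Average squared prediction error over all examples."""
    return sum((weight * x - t) ** 2 for x, t in examples) / len(examples)


weight = 0.0  # the program starts with no knowledge of the pattern
errors = [mean_squared_error(weight)]
for epoch in range(5):
    for x, target in examples:
        weight = train_step(weight, x, target)
    errors.append(mean_squared_error(weight))

print(f"learned weight: {weight:.4f}")
print(f"error before training: {errors[0]:.4f}, after: {errors[-1]:.2e}")
```

The programmer never writes the value 2 into the code. The program arrives near it by repeatedly adjusting its own parameter, and the prediction error shrinks as it sees more examples.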

AI-based systems are now being developed and used in practically every industry, from agriculture to space exploration, and in applications ranging from law enforcement to online banking.

The methods and techniques of computer science are constantly evolving, expanding, and improving.

Other terms linked to machine learning, such as reinforcement learning and neural networks, refer to important components of cutting-edge artificial intelligence systems.

See also:

Embodiment, AI and; Superintelligence; Turing, Alan; Turing Test.

Further Reading:

Kelnar, David. 2016. “The Fourth Industrial Revolution: A Primer on Artificial Intelligence (AI).” Medium, December 2, 2016. https://medium.com/mmc-writes/the-fourth-industrial-revolution-a-primer-on-artificial-intelligence-ai-ff5e7fffcae1.

Kurzweil, Ray. 2005. The Singularity Is Near: When Humans Transcend Biology. New York: Viking.

Mayes, Jason. 2017. Machine Learning 101. https://docs.google.com/presentation/d/1kSuQyW5DTnkVaZEjGYCkfOxvzCqGEFzWBy4e9Uedd9k/htmlpresent.

Müller, Vincent C., and Nick Bostrom. 2016. “Future Progress in Artificial Intelligence: A Survey of Expert Opinion.” In Fundamental Issues of Artificial Intelligence, edited by Vincent C. Müller, 553–71. New York: Springer.

Russell, Stuart, and Peter Norvig. 2003. Artificial Intelligence: A Modern Approach. Englewood Cliffs, NJ: Prentice Hall.

Samuel, Arthur L. 1988. “Some Studies in Machine Learning Using the Game of Checkers I.” In Computer Games I, 335–65. New York: Springer.