What is a Quantum Processing Unit (QPU)?

Despite its widespread use, the phrase "quantum computer" may be misleading.

It conjures up thoughts of a whole new and alien kind of computer, one that replaces all current computing software with a future alternative.

- This is a widespread and serious misunderstanding at the time of writing.

- The potential of quantum computers comes from their capacity to significantly expand the range of problems that are computationally tractable, not from any ability to replace conventional computers.

- There are significant computational problems that a quantum computer could readily solve but that would be infeasible on any conventional computing device we could ever hope to construct.

Importantly, though, these sorts of speedups have only been found for a few problems, and although more are expected to be discovered, it is very doubtful that running all calculations on a quantum computer would ever make sense.

For the vast majority of activities that use your laptop's clock cycles, a quantum computer is no better.

In other words, a quantum computer is actually a co-processor from the standpoint of the programmer.

- Historically, computers have used a variety of coprocessors, each with its own specialty, such as floating-point arithmetic, signal processing, or real-time graphics.

- With this in mind, we'll refer to the device on which our code samples run as a QPU (Quantum Processing Unit).

This, we believe, emphasizes the critical context in which quantum computing should be considered.

A quantum processing unit (QPU), sometimes known as a quantum chip, is a physical (fabricated) device with a network of linked qubits.

- It's the cornerstone of a complete quantum computer, which also comprises the QPU's housing environment, control circuits, and a slew of other components.

Programming for a QPU

As with other coprocessors such as the GPU (Graphics Processing Unit), QPU programming entails writing code that will mainly execute on a regular computer's CPU (Central Processing Unit).

- The CPU issues instructions to the QPU coprocessor only to start tasks that suit its capabilities.

- Fortunately (and excitingly), a few prototype QPUs are already available through the cloud as of this writing.

- Furthermore, conventional computing hardware can be used to simulate the behavior of a QPU for simpler tasks.

Although simulating larger QPU programs is impractical, simulation is a handy way to learn how to operate a real QPU with smaller code snippets.

- Even when more complex QPUs become available, the fundamental QPU code examples will remain both useful and instructive.

- There are a plethora of QPU simulators, libraries, and systems to choose from.
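To make the simulation idea concrete, here is a minimal sketch of a state-vector simulator in plain Python. It is not taken from any particular simulator or library; the function names (`apply_single_qubit_gate`, `state_vector_bytes`) and the 16-bytes-per-amplitude figure are assumptions chosen for illustration. It puts one qubit into an equal superposition and estimates how much memory the full state vector needs as the qubit count grows, which is why simulating larger programs quickly becomes impractical.

```python
import math


def apply_single_qubit_gate(state, gate, target, n_qubits):
    """Apply a 2x2 gate to qubit `target` of an n-qubit state vector."""
    new_state = list(state)
    bit = 1 << target  # qubit 0 is the least significant bit of the index
    for i in range(1 << n_qubits):
        if i & bit == 0:
            j = i | bit  # same basis state, but with the target qubit set to 1
            a0, a1 = state[i], state[j]
            new_state[i] = gate[0][0] * a0 + gate[0][1] * a1
            new_state[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new_state


def measurement_probabilities(state):
    """Probability of each basis state: the squared magnitude of its amplitude."""
    return [abs(amplitude) ** 2 for amplitude in state]


def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a full state vector (assumes one 16-byte complex per amplitude)."""
    return (1 << n_qubits) * bytes_per_amplitude


s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]

state = [1.0, 0.0]  # one qubit, starting in |0>
state = apply_single_qubit_gate(state, HADAMARD, target=0, n_qubits=1)
probs = measurement_probabilities(state)  # roughly [0.5, 0.5]

for n in (10, 30, 50):
    print(n, "qubits need about", state_vector_bytes(n), "bytes")
```

Under these assumptions, 30 qubits already need 16 GiB for the state vector alone, and every additional qubit doubles that figure.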

Quantum Processing Units (QPUs) Make Quantum Computing Possible

A quantum processing unit (QPU) is a physical or virtual processor with a large number of linked qubits that can be used to run quantum algorithms.

- A quantum computer or quantum simulator would not be complete without it.

- Quantum devices are still in their infancy, and not all of them are capable of running all Q# programs.

- As a result, while creating programs for various targets, you must keep certain constraints in mind.

- Quantum computing builds a computer architecture on quantum mechanics, the physics of matter and energy at the atomic and subatomic scale.

Quantum computing is a world apart from traditional computing ("classical computing").

- It is suited to only a limited class of problems, all of which are based on mathematics and expressed as equations.

- Quantum computer processing imitates nature at the atomic level, and one of its most promising applications is the study of molecular interactions in order to unravel nature's secrets.

The first quantum computers were demonstrated in 1998 at Oxford University and IBM's Almaden Research Center.

- There were around a hundred functional quantum computers across the globe by 2020.

- Due to the exorbitant expense of creating and maintaining quantum computers, quantum computing will most likely be delivered as a cloud service rather than as hardware for enterprises to purchase. We'll have to wait and see.

In that model, the quantum coprocessor is reached through a quantum cloud service.

Because the number of possibilities grows so quickly with problem size, even the fastest supercomputers struggle with certain problems.

- Consider the classic traveling salesman problem, which entails finding the most cost-effective round trip between a set of locations.

- The first stage is to count all feasible routes, which yields a 63-digit number if the journey involves 50 cities.

- Whereas traditional computers may take days or even months to tackle such problems, quantum computers are projected to respond in seconds or minutes.

- The legend of the rice grains on the chessboard, where each square holds double the previous one, illustrates the same kind of explosive growth.
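The 63-digit figure quoted for the 50-city traveling salesman problem can be checked with a few lines of Python. The sketch below assumes the usual symmetric version of the problem, where a route and its reverse cost the same, so fixing the starting city leaves (n - 1)! orderings and each round trip is counted twice. The function name `round_trip_count` is just an illustrative choice.

```python
import math


def round_trip_count(n_cities):
    """Distinct round trips through n cities (symmetric costs assumed)."""
    if n_cities < 3:
        raise ValueError("need at least 3 cities for a meaningful round trip")
    # Fix the start city: (n - 1)! orderings, halved because each
    # tour is counted once in each direction.
    return math.factorial(n_cities - 1) // 2


routes = round_trip_count(50)
digits = len(str(routes))
print(digits)  # 63
```

Each added city multiplies the count by roughly the number of cities, which is why listing every route stops being an option long before 50 cities.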

Superposition and Entanglement of Qubits

Quantum computing relies on the "qubit," or quantum bit, which may be made up of one or more electrons and can be engineered in a variety of ways.

- The property that lets a qubit be in a combination of the 0 and 1 states at the same time is known as quantum superposition.

- Entanglement is a property that links the states of two particles, even across a long distance, so that measuring one tells you about the other. It does not by itself let the particles send messages to each other.

- The two major kinds of quantum computer designs are gate model and quantum annealing.
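Superposition and entanglement are easier to see in a small calculation. The sketch below, in plain Python, tracks the four amplitudes of a two-qubit state, applies a Hadamard gate to the first qubit (superposition), and then applies a controlled-NOT (entanglement). The helper names and the index convention (index = 2 * first qubit + second qubit) are assumptions made for this illustration, not part of any library.

```python
import math

S = 1 / math.sqrt(2)


def hadamard_on_first(state):
    """Hadamard on the first qubit; state is indexed as 2 * q0 + q1."""
    new_state = [0.0] * 4
    for q1 in (0, 1):
        a0, a1 = state[q1], state[2 + q1]
        new_state[q1] = S * (a0 + a1)
        new_state[2 + q1] = S * (a0 - a1)
    return new_state


def cnot_first_controls_second(state):
    """Flip the second qubit when the first is 1: swaps |10> and |11>."""
    return [state[0], state[1], state[3], state[2]]


state = [1.0, 0.0, 0.0, 0.0]               # both qubits start in |00>
state = hadamard_on_first(state)           # first qubit in superposition
state = cnot_first_controls_second(state)  # the two qubits are now entangled

labels = ["00", "01", "10", "11"]
probs = {label: abs(a) ** 2 for label, a in zip(labels, state)}
print(probs)
```

Only "00" and "11" end up with non-zero probability, about one half each. Each qubit on its own looks like a fair coin, yet the two results always agree, which is the correlation that entanglement refers to.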

Gate Model QC

Quantum computers based on the gate model use gates that are similar in principle to the logic gates of classical computers but differ significantly in logic and design.

- Google, IBM, Intel, and Rigetti are among the businesses working on gate model machines, each with its own qubit architecture.

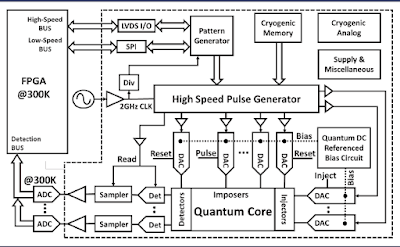

- Microwave pulses are used to control the qubits in the quantum device.

- The QC chip does digital-to-analog and analog-to-digital conversion.
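One concrete way in which quantum gates differ from classical ones is that every quantum gate is reversible: it is described by a unitary matrix U, meaning that U†U is the identity. The following sketch checks this for three standard gates (X, Hadamard, and CNOT) in plain Python and contrasts it with the classical AND gate, which loses information. The helper functions are written for this example only.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def dagger(m):
    """Conjugate transpose of a matrix."""
    return [[complex(m[j][i]).conjugate() for j in range(len(m))]
            for i in range(len(m[0]))]


def is_unitary(gate, tol=1e-12):
    """True when dagger(gate) * gate is the identity, within rounding error."""
    product = matmul(dagger(gate), gate)
    size = len(gate)
    return all(abs(product[i][j] - (1 if i == j else 0)) < tol
               for i in range(size) for j in range(size))


s = 1 / math.sqrt(2)
X = [[0, 1], [1, 0]]                    # quantum NOT
H = [[s, s], [s, -s]]                   # Hadamard
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0],
        [0, 0, 0, 1], [0, 0, 1, 0]]     # controlled-NOT

all_unitary = all(is_unitary(g) for g in (X, H, CNOT))

# Classical AND maps four inputs onto two outputs, so it cannot be undone.
and_outputs = {(a, b): a & b for a in (0, 1) for b in (0, 1)}
and_is_reversible = len(set(and_outputs.values())) == len(and_outputs)

print(all_unitary, and_is_reversible)
```

The three quantum gates pass the unitarity check, while AND fails the reversibility check because three different inputs all produce 0.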

IBM's Q Experience on the Cloud

- In 2016, IBM released a cloud-based 5-qubit gate model quantum computer to enable scientists to experiment with gate model programming.

- The open source Qiskit development kit, as well as a second machine with 16 qubits, were added a year later.

- A collection of instructional resources is available as part of the IBM Q Experience.

Superconducting materials

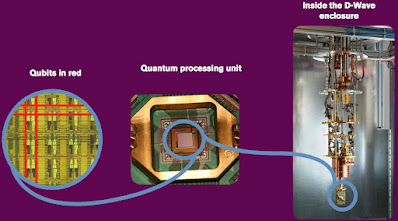

- Superconducting materials, like those employed in the D-Wave computer, must be kept at temperatures near absolute zero. Both photographs show the coverings removed to reveal the quantum chip at the bottom.

- Intel's Tangle Lake gate model quantum processor, featuring a novel design of single-electron transistors linked together, was introduced in 2018.

- At CES 2018, Intel CEO Brian Krzanich demonstrated the processor.

D-Wave Systems

D-Wave Systems in Canada is the only company that provides a "quantum annealing" computer.

- D-Wave computers are massive, refrigerated machines, with up to 2,000 qubits in earlier systems, that are used for optimization tasks including scheduling, financial analysis, and medical research.

- To solve a problem, annealing searches for the best path or the most efficient combination of parameters.
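Problems for an annealer are commonly written as the minimization of a quadratic function of binary variables, often called a QUBO (quadratic unconstrained binary optimization). The sketch below builds a tiny made-up example, picking exactly one of three options with costs 3, 1, and 2, and finds the lowest-energy assignment by brute force. The brute-force search is only a classical stand-in for the annealing hardware, and the costs, the penalty weight, and all function names are assumptions for illustration.

```python
from itertools import product


def build_pick_one_qubo(costs, penalty):
    """QUBO for: minimize cost while selecting exactly one option.

    Expands sum(c_i * x_i) + penalty * (sum(x_i) - 1) ** 2, using x_i ** 2 == x_i.
    Returns the coefficient dictionary and a constant offset.
    """
    q = {}
    for i, cost in enumerate(costs):
        q[(i, i)] = cost - penalty
        for j in range(i + 1, len(costs)):
            q[(i, j)] = 2 * penalty
    return q, penalty


def energy(q, offset, x):
    """Value of the QUBO objective for a tuple of 0/1 values."""
    return offset + sum(w * x[i] * x[j] for (i, j), w in q.items())


def brute_force_minimum(q, offset, n_vars):
    """Try every assignment; feasible only for a handful of variables."""
    return min(product((0, 1), repeat=n_vars),
               key=lambda x: energy(q, offset, x))


costs = [3, 1, 2]
q, offset = build_pick_one_qubo(costs, penalty=10)
best = brute_force_minimum(q, offset, len(costs))
best_energy = energy(q, offset, best)
print(best, best_energy)  # (0, 1, 0) 1
```

With three variables there are only eight assignments to try. The number of assignments doubles with every added variable, which is the scaling that motivates specialized optimization hardware.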

D-Wave's newest quantum annealing processor has 5,000 qubits.

- A cooling mechanism is required, much as it is for gate model quantum computers.

- Using liquid nitrogen and liquid helium stages, the temperature drops from top to bottom until it reaches nearly minus 459 degrees Fahrenheit, close to absolute zero.

Algorithms for Quantum Computing

Because new algorithms shape the construction of the next generation of quantum architecture, the algorithms for addressing real-world problems must be devised first.

- Both the gate model and the annealing approaches have challenges to overcome.

- However, experts anticipate that quantum computing will become commonplace in the near future.

State of Quantum Computing

Quantum computers are projected to eventually factor large numbers and break cryptographic secrets in a couple of seconds.

- It is just a matter of time, according to scientists, until this becomes a reality.

- When it occurs, it will have grave consequences, since encrypted transactions that rely on today's public-key cryptography, as well as current cryptocurrency systems, will be exposed to hackers.

- Quantum-safe approaches, on the other hand, are being developed.
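The text does not name the algorithm behind the factoring claim, but the best-known one is Shor's algorithm, which turns factoring into the problem of finding the period of repeated multiplication modulo N. The sketch below shows only the classical bookkeeping around that idea for a toy number. The period search here is done by brute force, which is exactly the step a quantum computer would be expected to speed up. The function names and the choice of N = 15 and base 7 are illustrative assumptions.

```python
import math


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1. This is the step a QPU would accelerate.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Try to split n using the order of a modulo n; None if a is unlucky."""
    shared = math.gcd(a, n)
    if shared != 1:
        return shared, n // shared  # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: try another base
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1: try another base
    p = math.gcd(half - 1, n)
    return p, n // p


order = find_order(7, 15)
factors = factor_from_order(7, 15)
print(order, factors)  # 4 (3, 5)
```

For numbers of cryptographic size the brute-force loop in `find_order` would never finish, which is why the prospect of doing that step on quantum hardware matters for encryption.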

As of 2020, the United States, Canada, Germany, France, the United Kingdom, the Netherlands, Russia, China, South Korea, and Japan are among the nations studying and investing in quantum computing.

The field of quantum computing is still in its infancy.

Given that the eight-ton UNIVAC I of the 1950s had shrunk to a chip a few decades later, it raises the question of what quantum computers will look like in 50 years.

~ Jai Krishna Ponnappan
