Alchemy and Artificial

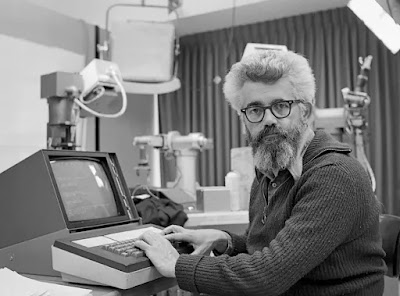

Intelligence, a RAND Corporation paper prepared by Massachusetts Institute of

Technology (MIT) philosopher Hubert Dreyfus and released as a mimeographed memo

in 1965, critiqued artificial intelligence researchers' aims and essential

assumptions.

The paper, which was written when Dreyfus was consulting for RAND, elicited a significant negative response from the AI community.

Dreyfus had been engaged by RAND, a nonprofit American global policy think tank, to analyze the possibilities for artificial intelligence research from a philosophical standpoint.

Researchers such as Herbert Simon and Marvin Minsky had made optimistic forecasts for the future of AI, predicting in the late 1950s that machines capable of doing whatever humans could do would exist within decades.

The objective for most AI researchers was not merely to develop programs whose output appeared to be the result of intelligent activity.

Rather, they wanted to create software that could mimic human cognitive processes.

Experts in artificial intelligence believed that human cognitive processes could serve as a model for their algorithms, and that AI could in turn provide insight into human psychology.

Dreyfus's thinking was influenced by the work of the phenomenologists Maurice Merleau-Ponty, Martin Heidegger, and Jean-Paul Sartre.

Dreyfus contended in his report that the theory and aims of AI were founded on associationism, a theory of human psychology whose core concept is that thinking proceeds in a succession of basic, predictable steps.

Because they accepted associationism (which Dreyfus argued was erroneous), artificial intelligence researchers believed they could use computers to duplicate human cognitive processes.

Dreyfus compared the characteristics of human thinking (as he saw them) with computer information processing and the inner workings of various AI systems.

The core of his thesis was that human and machine information processing are fundamentally different.

In his view, computers can only be programmed to handle "unambiguous, totally organized information," which renders them incapable of managing the "ill-structured material of everyday life," and hence incapable of intelligence (Dreyfus 1965, 66).

Dreyfus further contended that, contrary to the primary premise of AI research, many characteristics of human intelligence cannot be represented by rules or explained by associationist psychology.

Dreyfus outlined three areas in which humans differ from computers in terms of information processing: fringe consciousness, insight, and ambiguity tolerance.

Chess players, for example, use fringe consciousness to decide which area of the board, or which pieces, to concentrate on when making a move.

The human player differs from a chess-playing program in that the human does not, consciously or unconsciously, examine the information or count out possible moves the way the computer does.

Only after the player has used fringe consciousness to choose which pieces to concentrate on can they consciously calculate the implications of possible moves in a manner akin to computer processing.

Through insight, the human problem-solver can devise a sequence of steps for tackling a complicated problem by grasping its fundamental structure.

Problem-solving software lacks this understanding.

Instead, the problem-solving method must be specified in advance as part of the program.

The clearest example of ambiguity tolerance is natural language comprehension, in which a word or phrase may have an unclear meaning yet is accurately understood by the listener.

When interpreting ambiguous syntax or semantics, there is an endless number of cues to examine, yet the human processor manages to select the relevant information from this limitless domain and arrive at the correct meaning.

A computer, by contrast, cannot be programmed to search through all conceivable facts in order to interpret ambiguous syntax or semantics.

Either the number of facts is too large, or the criteria for interpretation are too complex.

AI experts criticized Dreyfus for oversimplifying the difficulties and misrepresenting the capabilities of computers.

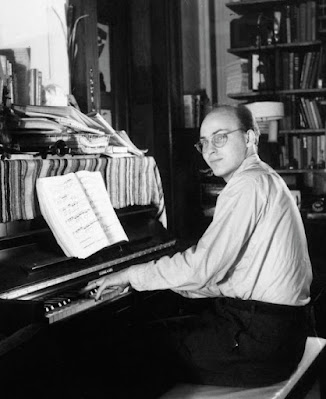

RAND commissioned MIT computer scientist Seymour Papert to respond to the study; Papert published his response in 1968 as The Artificial Intelligence of Hubert L. Dreyfus: A Budget of Fallacies.

Papert also set up a chess match between Dreyfus and the chess program Mac Hack, which Dreyfus lost, much to the amusement of the artificial intelligence community.

Nonetheless, some of Dreyfus's criticisms in this report and in his subsequent books appear to have foreshadowed intractable problems later acknowledged by AI researchers, such as the difficulty of achieving artificial general intelligence (AGI), the artificial simulation of analog neurons, and the limitations of symbolic artificial intelligence as a model of human reasoning.

At the time, artificial intelligence specialists dismissed Dreyfus's work as worthless, arguing that he had misunderstood their research.

Their ire had been aroused by the often aggressive terminology of Dreyfus's critiques of AI.

The New Yorker magazine's "Talk of the Town" section included extracts from the story.

Dreyfus subsequently refined and expanded his argument in What Computers Can't Do: The Limits of Artificial Intelligence, published in 1972.

~ Jai Krishna Ponnappan


See also: Mac Hack; Simon, Herbert A.; Minsky, Marvin.

Further Reading

Crevier, Daniel. 1993. AI: The Tumultuous History of the Search for Artificial Intelligence. New York: Basic Books.

Dreyfus, Hubert L. 1965. Alchemy and Artificial Intelligence. P-3244. Santa Monica, CA: RAND Corporation.

Dreyfus, Hubert L. 1972. What Computers Can’t Do: The Limits of Artificial Intelligence. New York: Harper and Row.

McCorduck, Pamela. 1979. Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence. San Francisco: W. H. Freeman.

Papert, Seymour. 1968. The Artificial Intelligence of Hubert L. Dreyfus: A Budget of Fallacies. Project MAC, Memo No. 154. Cambridge, MA: Massachusetts Institute of Technology.