Ray Kurzweil is a futurist and inventor from the United States.

He spent the first half of his career developing the first CCD flat-bed scanner, the first omni-font optical character recognition device, the first print-to-speech reading machine for the blind, the first text-to-speech synthesizer, the first music synthesizer capable of recreating the grand piano and other orchestral instruments, and the first commercially marketed, large-vocabulary speech recognition machine.

He has earned several awards for his contributions to technology, including the Technical Grammy Award in 2015 and the National Medal of Technology.

Kurzweil is the cofounder and chancellor of Singularity University, as well as the director of engineering at Google, where he leads a team that works on artificial intelligence and natural language processing.

Singularity University is a non-accredited graduate school founded on the premise of tackling great challenges, such as renewable energy and space travel, through a deep understanding of the opportunities created by the current acceleration of technological progress.

The university, which is headquartered in Silicon Valley, has grown to include one hundred chapters in fifty-five countries, delivering seminars, educational programs, and business acceleration programs.

Kurzweil published the book How to Create a Mind in 2012, shortly before joining Google.

In his Pattern Recognition Theory of Mind, he claims that the neocortex is a hierarchical structure of pattern recognizers.

Kurzweil claims that replicating this design in machines might lead to the creation of artificial superintelligence.

He believes that by doing so, he will be able to bring natural language comprehension to Google.

Kurzweil's popularity stems from his work as a futurist.

Futurists are those who specialize in or are interested in the near-to-long-term future and associated topics.

They use well-established methodologies like scenario planning to carefully examine forecasts and construct future possibilities.

Kurzweil is the author of five national best-selling books, including The Singularity Is Near, which was named a New York Times best-seller (2005).

He has an extensive list of forecasts.

In his debut book, The Age of Intelligent Machines (1990), Kurzweil predicted the enormous growth of worldwide internet use in the second half of the decade.

In his second highly influential book, The Age of Spiritual Machines (1999), where "spiritual" stands for "aware," he correctly predicted that computers would soon surpass humans at making the best investment decisions.

Kurzweil prophesied in the same book that computers would one day "appear to have their own free will" and perhaps have "spiritual experiences" (Kurzweil 1999, 6).

The barriers between humans and machines will dissolve to the point that humans will essentially live forever as human-machine hybrids.

Scientists and philosophers have criticized Kurzweil's forecast of a sentient computer, arguing that awareness cannot be created by computation.

Kurzweil tackles the phenomenon of the Technological Singularity in his third book, The Singularity Is Near.

John von Neumann, a famous mathematician, was the first to use the word singularity in this sense.

Recalling a 1950s conversation with von Neumann, his colleague Stanislaw Ulam wrote that it centered on "the ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue" (Ulam 1958, 5).

To put it another way, technological development would alter the course of human history.

Vernor Vinge, a computer scientist, math professor, and science fiction writer, revived the term in 1993 and used it in his article "The Coming Technological Singularity." In Vinge's article, technological progress is defined more specifically as an increase in processing power.

Vinge investigates the idea of a self-improving artificial intelligence agent.

According to this theory, the artificially intelligent agent continues to update itself and advance technologically at an unfathomable pace, eventually resulting in the birth of a superintelligence, that is, an artificial intelligence that far exceeds all human intelligence.

In Vinge's dystopian vision, machines first become autonomous, then superintelligent, to the point where humans lose control of technology and machines seize control of their own fate.

Machines will rule the planet because technology is more intelligent than humans.

According to Vinge, the Singularity is the end of the human age.

Kurzweil, by contrast, presents an anti-dystopian view of the Singularity.

Kurzweil's core premise is that humans can develop something smarter than themselves; in fact, exponential advances in computer power make the creation of an intelligent machine all but inevitable, to the point that the machine will surpass humans in intelligence.

Kurzweil believes that at this point machine intelligence and human intelligence will merge.

The subtitle of The Singularity Is Near is When Humans Transcend Biology, which is no coincidence.

Kurzweil's overarching vision is based on discontinuity: no lesson from the past, or even the present, can aid humans in determining the way to the future.

This also explains why new types of education, such as Singularity University, are required.

Every sentimental look back at history, every memory of the past, renders humans more vulnerable to technological change.

With the arrival of a new superintelligent, almost immortal race, history as a human construct will soon come to an end.

These immortals are known as posthumans, the next phase in human development.

Kurzweil believes that posthumanity will be made up of sentient robots rather than people with mechanical bodies.

He claims that the future should be formed on the assumption that mankind is in the midst of an extraordinary period of technological advancement.

The Singularity, he believes, will elevate humanity beyond its wildest dreams.

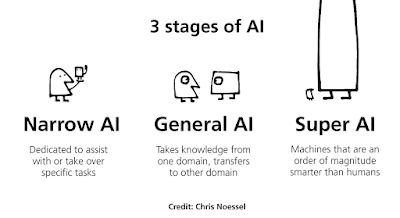

While Kurzweil claims that artificial intelligence is now outpacing human intellect on certain activities, he also acknowledges that the moment of superintelligence, often known as the Technological Singularity, has not yet arrived.

He believes that individuals who embrace the new age of human-machine synthesis and dare to go beyond evolution's boundaries will view humanity's future as positive.

See also:

General and Narrow AI; Superintelligence; Technological Singularity.

Further Reading:

Kurzweil, Ray. 1990. The Age of Intelligent Machines. Cambridge, MA: MIT Press.

Kurzweil, Ray. 1999. The Age of Spiritual Machines: When Computers Exceed Human Intelligence. New York: Penguin.

Kurzweil, Ray. 2005. The Singularity Is Near: When Humans Transcend Biology. New York: Viking.

Ulam, Stanislaw. 1958. “Tribute to John von Neumann.” Bulletin of the American Mathematical Society 64, no. 3, pt. 2 (May): 1–49.

Vinge, Vernor. 1993. “The Coming Technological Singularity: How to Survive in the Post-Human Era.” In Vision 21: Interdisciplinary Science and Engineering in the Era of Cyberspace, 11–22. Cleveland, OH: NASA Lewis Research Center.