Emergent gameplay occurs when a player in a video game encounters complex scenarios that arise from their interactions with the game world, its characters, and other players. In today's video games, players may fully immerse themselves in an intricate, realistic game environment and feel the consequences of their choices. Players may also personalize and build their own character and story.

The Deus Ex series, which began in 2000 and was one of the first games built around emergent gameplay, is an example. Players take on the role of a cyborg in a dystopian metropolis. They may change their character's physical appearance, skill sets, missions, and affiliations. Players may choose between militarized augmentations that allow for more aggressive play and options that support a stealthier approach. The choices players make about how to customize their character and how to play alter the plot and the experience, resulting in unique challenges and outcomes for each player.

In emergent gameplay, the game environment reacts when players interact with other characters or items. Because there are so many options, the story unfolds in surprising ways as the game world changes. The designer does not predetermine specific outcomes. Players can even take advantage of flaws in a game to generate actions the designers did not anticipate, which some consider a form of emergence.

Game creators have increasingly turned to artificial intelligence so that the game environment can respond to player actions in a timely manner. Artificial intelligence drives the behavior of video game characters and their interactions through algorithms, basic rule-based procedures that help generate the game environment in sophisticated ways. The use of artificial intelligence in games is referred to as "game AI."

The most common use of AI algorithms is to construct non-player characters (NPCs), the characters in the game world that the player interacts with but does not control. In its most basic form, game AI uses pre-scripted actions for these characters, which focus on reacting to particular events. Pre-scripted behaviors are fairly rudimentary: NPCs are meant to respond to certain "case" events. The NPC evaluates its current situation and then responds within a range of behaviors determined by the AI algorithm.
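To make the idea of pre-scripted "case" responses concrete, the following is a minimal Python sketch, not taken from any particular game. The event names, the health threshold of 30, and the response strings are hypothetical. The NPC first evaluates its situation (how hurt it is, whether it is friendly) and then looks up its response in a fixed script, so it can never do anything the script does not list.

```python
from typing import Dict, Tuple

# Hypothetical script: (event, situation) -> scripted response.
SCRIPT: Dict[Tuple[str, str], str] = {
    ("player_spotted", "friendly"): "wave",
    ("player_spotted", "hostile"): "attack",
    ("took_damage", "healthy"): "fight_back",
    ("took_damage", "wounded"): "flee",
}


def evaluate_situation(event: str, health: int, friendly: bool) -> str:
    """Classify the NPC's current situation for the given event."""
    if event == "took_damage":
        # Hypothetical threshold: below 30 health the NPC counts as wounded.
        return "healthy" if health >= 30 else "wounded"
    return "friendly" if friendly else "hostile"


def respond(event: str, health: int, friendly: bool) -> str:
    """Return the scripted response, or 'idle' for events the script does not cover."""
    situation = evaluate_situation(event, health, friendly)
    return SCRIPT.get((event, situation), "idle")


print(respond("took_damage", health=20, friendly=True))      # flee
print(respond("player_spotted", health=100, friendly=True))  # wave
print(respond("door_opened", health=100, friendly=True))     # idle
```

The lookup table is the whole "intelligence" here: adding new behavior means adding new rows, which is why such NPCs feel limited.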

Pac-Man (1980) is a good early and basic illustration of this. The player steers Pac-Man through a labyrinth while four ghosts, the game's non-player characters, pursue him. Players could interact with the ghosts only by moving around the maze; the ghosts had limited responses and their own AI-programmed, pre-scripted movement. When a ghost ran into a wall, a planned AI reaction would occur: the AI would roll a virtual die to determine whether the ghost would turn toward or away from the player. If the die indicated pursuit, the pre-scripted program would detect the player's location and turn the ghost toward them. Otherwise, the ghost would turn in the opposite direction or in a random direction. This NPC interaction is very simple and limited; however, it was an early step toward AI providing emergent gameplay.
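The ghost logic described above can be sketched in a few lines of Python. The sketch follows the simplified account given here rather than the arcade code: in the original game, ghosts actually choose directions at maze intersections, usually by steering toward a target tile that differs from ghost to ghost. The function names, the grid coordinates, and the 50 percent chase probability are hypothetical, and the sketch ignores which directions are actually open in the maze.

```python
import random
from typing import Optional, Tuple

Point = Tuple[int, int]  # (x, y) grid position; y grows downward
DIRECTIONS = ("up", "down", "left", "right")
OPPOSITE = {"up": "down", "down": "up", "left": "right", "right": "left"}


def direction_toward(ghost: Point, player: Point) -> str:
    """Direction along the axis with the larger gap to the player."""
    dx = player[0] - ghost[0]
    dy = player[1] - ghost[1]
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


def turn_at_wall(ghost: Point, player: Point, chase_chance: float = 0.5,
                 rng: Optional[random.Random] = None) -> str:
    """Roll a die when the ghost hits a wall, then chase or turn away."""
    if rng is None:
        rng = random.Random()
    if rng.random() < chase_chance:
        # Chase: detect the player's location and turn toward it.
        return direction_toward(ghost, player)
    # No chase: turn away from the player, or pick any direction at random.
    away = OPPOSITE[direction_toward(ghost, player)]
    return rng.choice([away, rng.choice(DIRECTIONS)])


rng = random.Random(1)
print(turn_at_wall((3, 3), (10, 4), rng=rng))
```

Passing a seeded `random.Random` makes a ghost's behavior reproducible, which is convenient when testing how often it chases.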

Contemporary games offer far more options and a much larger set of possible interactions. Players in contemporary role-playing games (RPGs) are given an enormous number of potential choices, as exemplified by Fallout 3 (2008) and its sequels. In Fallout, the player takes on the role of a survivor in a post-apocalyptic America. The narrative gives the player a goal but no set path, leaving the player free to play as they see fit. The player can punch every NPC, or they can talk to them instead. In addition to this variety of player actions, the game contains a variety of NPCs controlled by AI.

Some of the NPCs are key NPCs, which means they have their own unique scripted dialogue and responses. This gives them a personality and, through AI, adds a complexity that makes the game environment feel more real. When the player talks to a key NPC, the player is offered options for what to say, and the key NPC has its own unique responses. Key NPCs differ from background NPCs in that they are meant to respond in a way that emulates interaction with a real personality. These are still pre-scripted responses, but the NPCs' behavior is emergent because of the many possible combinations of interactions. As the player makes decisions, the NPC examines each decision and decides how to respond according to its script. The NPCs that players help or hurt, and the resulting interactions, shape the game world.
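The following Python sketch illustrates how fixed, pre-scripted lines can still produce varied conversations. It is a hypothetical model, not Fallout's dialogue system: the disposition scale, the thresholds, and all dialogue lines are assumptions. Every reply is written in advance, but which reply the player hears depends on the NPC's disposition, which in turn depends on everything the player has said before.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical script: (player choice, NPC disposition band) -> pre-written reply.
SCRIPT = {
    ("ask_for_help", "friendly"): "Of course. I'll show you the way.",
    ("ask_for_help", "neutral"): "Help isn't free, stranger.",
    ("ask_for_help", "hostile"): "Get lost.",
    ("compliment", "friendly"): "You're all right yourself.",
    ("compliment", "neutral"): "Flattery won't get you far.",
    ("compliment", "hostile"): "Save it.",
    ("threaten", "friendly"): "I thought we were friends...",
    ("threaten", "neutral"): "Is that a threat?",
    ("threaten", "hostile"): "Try it.",
}

# How much each player choice shifts the NPC's disposition.
EFFECT = {"ask_for_help": 0, "compliment": 10, "threaten": -25}


@dataclass
class KeyNPC:
    name: str
    disposition: int = 0  # -100 (hostile) to 100 (friendly)
    history: List[str] = field(default_factory=list)

    def band(self) -> str:
        if self.disposition >= 20:
            return "friendly"
        if self.disposition <= -20:
            return "hostile"
        return "neutral"

    def respond(self, choice: str) -> str:
        # Pick the scripted reply for the NPC's current mood...
        reply = SCRIPT[(choice, self.band())]
        # ...then remember the choice and let it shift future replies.
        self.history.append(choice)
        self.disposition = max(-100, min(100, self.disposition + EFFECT[choice]))
        return reply


npc = KeyNPC("Trader")
for choice in ["ask_for_help", "compliment", "compliment", "ask_for_help", "threaten"]:
    print(f"{choice}: {npc.respond(choice)}")
```

In this run the same request for help gets a different reply the second time, because the two compliments moved the trader into the friendly band; the later threat then pushes the disposition back down.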

Game AI can emulate personalities and present emergent gameplay in a narrative setting, but AI is also used to challenge the player at different difficulty settings. Pre-scripted AI can still be used to create difficulty. In games where players fight, pre-scripted AI is often designed to make suboptimal decisions for enemy NPCs. This makes the game easier and also makes the NPCs seem more human. Suboptimal pre-scripted decisions make enemy NPCs easier to handle, whereas optimal decisions make opponents far more difficult.

This can be seen in contemporary games such as Tom Clancy’s The Division (2016), in which players fight many different NPCs. The enemy NPCs range from angry rioters to fully trained paramilitary units. The rioter NPCs offer an easier challenge because they are not trained in combat and make suboptimal decisions while fighting the player. The military-trained NPCs are designed with AI that makes more nearly optimal decisions, increasing the difficulty for the player fighting them.
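One simple way to model the gap between rioters and trained units is a single "skill" parameter that controls how often an enemy picks its best available action. The Python sketch below is an illustration under that assumption, not how The Division is implemented; the action names, scores, and skill values are hypothetical.

```python
import random
from typing import Dict, Optional


def choose_action(scores: Dict[str, float], skill: float,
                  rng: Optional[random.Random] = None) -> str:
    """Return the best-scoring action with probability `skill`,
    otherwise a deliberately suboptimal one."""
    if rng is None:
        rng = random.Random()
    ranked = sorted(scores, key=lambda action: scores[action], reverse=True)
    if len(ranked) == 1 or rng.random() < skill:
        return ranked[0]
    return rng.choice(ranked[1:])


# Hypothetical options at one moment of a firefight, scored by how good they are.
SCORES = {"take_cover": 0.9, "flank": 0.7, "charge": 0.3, "stand_still": 0.1}

RIOTER_SKILL = 0.3    # untrained: usually makes a worse choice
MILITARY_SKILL = 0.9  # trained: usually makes the best choice

rng = random.Random(42)
rioter = [choose_action(SCORES, RIOTER_SKILL, rng) for _ in range(1000)]
soldier = [choose_action(SCORES, MILITARY_SKILL, rng) for _ in range(1000)]
print("rioter took cover:", rioter.count("take_cover"))
print("soldier took cover:", soldier.count("take_cover"))
```

Because the mistakes are drawn from other plausible actions rather than from nonsense moves, a low-skill enemy looks careless rather than broken, which fits the point that suboptimal play can make NPCs seem more human.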

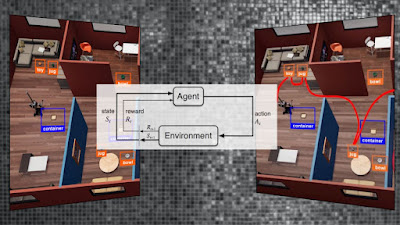

Adaptive AI takes emergent gameplay further still. As with pre-scripted AI, an adaptive AI character examines a variety of variables and plans an action. However, unlike pre-scripted AI, which follows predetermined decisions, an adaptive AI character makes its own decisions. This can be done through machine learning. AI-controlled NPCs follow rules governing their interactions with players. As players progress through the game, their interactions are analyzed, and some AI judgments become more heavily weighted than others. This is done to give each player a distinct experience. The AI actively examines various player behaviors and adjusts future challenges accordingly. The purpose of adaptive AI is to challenge players enough that the game remains fun without being too easy or too hard. Players can still change the difficulty if they want a different challenge.
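Reweighting AI judgments based on observed player behavior can be illustrated with a simple multiplicative-weights update, one of many possible techniques and not a method any particular game is confirmed to use. In this hypothetical Python sketch, tactics that succeed against the current player become more likely and tactics the player keeps countering become less likely; the tactic names and the learning rate are assumptions.

```python
import random
from typing import Dict, Iterable, Optional


class AdaptiveOpponent:
    """Hypothetical adaptive AI that reweights its tactics per player."""

    def __init__(self, tactics: Iterable[str], learning_rate: float = 0.2,
                 rng: Optional[random.Random] = None) -> None:
        self.weights: Dict[str, float] = {t: 1.0 for t in tactics}
        self.learning_rate = learning_rate
        self.rng = rng if rng is not None else random.Random()

    def pick(self) -> str:
        """Choose a tactic with probability proportional to its weight."""
        tactics = list(self.weights)
        return self.rng.choices(tactics, weights=[self.weights[t] for t in tactics])[0]

    def observe(self, tactic: str, succeeded: bool) -> None:
        """Strengthen tactics that worked on this player; weaken ones that failed."""
        factor = 1 + self.learning_rate if succeeded else 1 - self.learning_rate
        self.weights[tactic] *= factor

    def probabilities(self) -> Dict[str, float]:
        total = sum(self.weights.values())
        return {t: w / total for t, w in self.weights.items()}


# A player who keeps beating frontal rushes but falls for ambushes.
ai = AdaptiveOpponent(["rush", "snipe", "ambush"], rng=random.Random(7))
for _ in range(10):
    ai.observe("rush", succeeded=False)
    ai.observe("ambush", succeeded=True)
print({t: round(p, 3) for t, p in ai.probabilities().items()})
```

Left unchecked, this loop would keep exploiting the player's weakest spot; a design aiming for "not too easy or too hard" would also cap how far the weights can drift so the opponent stays beatable.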

This can be observed in the AI Director of the Left 4 Dead series, which began in 2008. Players navigate through a level, killing zombies and gathering resources in order to survive. The AI Director chooses which zombies to spawn, where they will spawn, and what supplies will appear. These choices are not made purely at random; rather, they are based on how well the players are performing throughout the level. Because the AI Director makes its own decisions about how to respond, it adapts to the players' success in the level. As the difficulty level rises, the AI Director provides fewer resources and spawns more adversaries.
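The feedback loop described above can be sketched as a function that turns a measure of team performance into a spawn plan. This is a hypothetical Python illustration, not Valve's AI Director; the performance formula, the baseline of 20 zombies, and the supply counts are assumptions.

```python
from dataclasses import dataclass


@dataclass
class TeamState:
    avg_health: float     # 0.0 (near death) to 1.0 (full health)
    incapacitations: int  # times players went down in the level so far


@dataclass
class SpawnPlan:
    zombies: int
    med_kits: int
    ammo_piles: int


def performance(state: TeamState) -> float:
    """Hypothetical 0..1 score: healthy teams that rarely go down score high."""
    score = state.avg_health - 0.25 * state.incapacitations
    return max(0.0, min(1.0, score))


def plan_next_area(state: TeamState, base_zombies: int = 20) -> SpawnPlan:
    """More enemies and fewer supplies for a strong team; the reverse for a weak one."""
    p = performance(state)
    return SpawnPlan(
        zombies=round(base_zombies * (0.5 + p)),  # 50% to 150% of the baseline
        med_kits=round(3 * (1 - p)),              # up to 3 kits for a struggling team
        ammo_piles=1 if p > 0.7 else 2,
    )


print(plan_next_area(TeamState(avg_health=1.0, incapacitations=0)))
# SpawnPlan(zombies=30, med_kits=0, ammo_piles=1)
print(plan_next_area(TeamState(avg_health=0.4, incapacitations=2)))
# SpawnPlan(zombies=10, med_kits=3, ammo_piles=2)
```

Clamping the score to the range 0 to 1 keeps the plan bounded, so even a team that is doing very badly never sees the zombie count drop below half the baseline.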

Advances in simulation and game world design continue to shape emergent gameplay, and developing technologies such as virtual reality will help drive this progress. Virtual reality games provide an even more immersive experience: players can use their own hands and eyes to interact with the environment. Computers are growing more powerful, allowing more realistic images and animations to be rendered. Adaptive AI points toward truly autonomous decision-making and a genuinely participatory gaming experience. Game makers continue to build more immersive environments as AI improves to provide more lifelike behavior. These cutting-edge technologies and new AI techniques will take emergent gameplay to new heights. Artificial intelligence has become a crucial part of the video game industry for developing realistic and engrossing games.


See also:

Brooks, Rodney; Distributed and Swarm Intelligence; General and Narrow AI.
