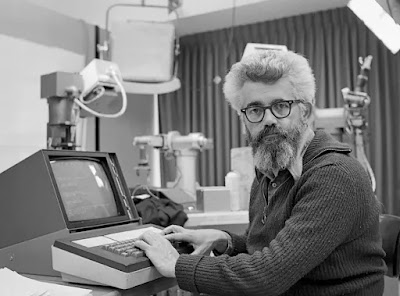

Hans Moravec (1948–) is

well-known in the computer science community as the long-time head of Carnegie

Mellon University's Robotics Institute and an unashamed technological

optimist.

For the last twenty-five years, he has studied and produced

artificially intelligent robots at the CMU lab, where he is still an adjunct

faculty member.

Moravec spent almost 10 years as a research assistant at

Stanford University's groundbreaking Artificial Intelligence Lab before coming

to Carnegie Mellon.

Moravec is also noted for his paradox, which states that,

contrary to popular belief, it is simple to program high-level thinking skills

into robots (as with chess or Jeopardy!) but difficult to impart sensorimotor

agility.

Human sensory and motor abilities have developed over

millions of years and seem to be easy, despite their complexity.

Higher-order cognitive abilities, on the other hand, are the

result of more recent cultural development.

Geometry, stock market research, and petroleum engineering

are examples of disciplines that are difficult for people to learn but easier

for robots to learn.

"The basic lesson of thirty-five years of AI research

is that the hard problems are easy and the easy problems are hard," writes

Steven Pinker of Moravec's scientific career.

Moravec built his first toy robot out of scrap metal when he

was eleven years old, and his light-following electronic turtle and a robot

operated by punched paper tape earned him two high school science fair honors.

He proposed a Ship of Theseus-like analogy for the viability

of artificial brains while still in high school.

Consider replacing a person's human neurons one by one with

precisely manufactured equivalents, he said.

When do you think human awareness will vanish? Is anybody

going to notice? Is it possible to establish that the person is no longer

human? Later in his career, Moravec would suggest that human knowledge and

training might be broken down in the same manner, into subtasks that machine

intelligences could take over.

Moravec's master's thesis focused on the development of a

computer language for artificial intelligence, while his PhD research focused

on the development of a robot that could navigate obstacle courses utilizing

spatial representation methods.

The region of interest (ROI) in a scene was identified by

these robot vision systems.

Moravec's early computer vision robots were extremely

sluggish by today's standards, taking around five hours to go from one side of

the facility to the other.

To measure distance and develop an internal picture of

physical impediments in the room, a remote computer carefully analysed

continuous video-camera images recorded by the robot from various angles.

Moravec finally developed 3D occupancy grid technology,

which allowed a robot to create an awareness of a cluttered area in a matter of

seconds.
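The idea behind an occupancy grid can be illustrated with a minimal sketch. It uses the common textbook log-odds formulation, which is not necessarily the method used in Moravec's own systems. The sensor-model constants, the grid size, and all function names are hypothetical and chosen only for illustration.

```python
import math

# Hypothetical sensor-model constants (assumptions, not taken from Moravec's work).
L_OCC = math.log(0.7 / 0.3)    # evidence added when a cell is observed occupied
L_FREE = math.log(0.3 / 0.7)   # evidence added when a cell is observed empty


def make_grid(nx, ny, nz):
    """Create a 3D grid of log-odds values; 0.0 means 'unknown' (p = 0.5)."""
    return [[[0.0 for _ in range(nz)] for _ in range(ny)] for _ in range(nx)]


def update_cell(grid, cell, occupied):
    """Fold one observation of a cell into its running log-odds estimate."""
    x, y, z = cell
    grid[x][y][z] += L_OCC if occupied else L_FREE


def probability(grid, cell):
    """Convert a cell's log-odds back into an occupancy probability."""
    x, y, z = cell
    return 1.0 / (1.0 + math.exp(-grid[x][y][z]))


def integrate_ray(grid, cells_along_ray):
    """Mark every cell before the last as free and the last one as occupied."""
    *free_cells, hit = cells_along_ray
    for cell in free_cells:
        update_cell(grid, cell, occupied=False)
    update_cell(grid, hit, occupied=True)


grid = make_grid(4, 4, 4)
# Three range readings along the same line of sight, ending at cell (3, 0, 0).
for _ in range(3):
    integrate_ray(grid, [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)])

p_hit = probability(grid, (3, 0, 0))      # repeatedly seen as an obstacle
p_free = probability(grid, (1, 0, 0))     # repeatedly seen as empty space
p_unknown = probability(grid, (2, 2, 2))  # never observed
```

Each repeated reading pushes a cell's estimate further toward "occupied" or "free," while cells that were never observed stay at a probability of 0.5. This is how noisy measurements from many viewpoints can be accumulated into a single map.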

Moravec's lab took on a new challenge by converting a

Pontiac Trans Sport minivan into one of the world's first road-ready autonomous

cars.

The self-driving minivan reached speeds of up to 60 miles

per hour.

DANTE II, a robot capable of going inside the crater of an

active volcano on Mount Spurr in Alaska, was also constructed by the CMU

Robotics Institute.

While DANTE II's immediate aim was to sample harmful

fumarole gases, a job too perilous for humans, it was also planned to

demonstrate technologies for robotic expeditions to distant worlds.

The volcanic explorer robot used artificial intelligence to

navigate the perilous, boulder-strewn terrain on its own.

Because such rovers produced so much visual and other

sensory data that had to be analyzed and managed, Moravec believes that

experience with mobile robots spurred the development of powerful artificial

intelligence and computer vision methods.

For the National Aeronautics and Space Administration

(NASA), Moravec's team designed fractal branching ultra-dexterous robots

("Bush robots") in the 1990s.

These robots, which were proposed but never produced due to

the lack of necessary manufacturing technologies, consisted of a branching

hierarchy of dynamic articulated limbs, starting with a main trunk and

splitting down into smaller branches.

As a result, the Bush robot would have "hands" at

all scales, from macroscopic to tiny.

The tiniest fingers would be nanoscale in size, allowing

them to grip very tiny objects.

Moravec said the robot would need autonomy and depend on

artificial intelligence agents scattered throughout the robot's limbs and

branches because of the intricacy of manipulating millions of fingers in real

time.

He believed that the robots might be made entirely of carbon

nanotube material, using the rapid prototyping technology now known as 3D

printing.

Moravec believes that artificial intelligence will have a

significant influence on human civilization.

To stress the role of AI in change, he coined the concept of

the "landscape of human capability," which physicist Max Tegmark

later converted into a graphic depiction.

Moravec's picture depicts a three-dimensional landscape in

which higher altitudes represent tasks that are more difficult for

humans, while a rising sea represents the advancing capabilities of machines.

The point where the rising waters meet the shore marks

the line where robots and humans both struggle with the same tasks.

Art, science, and literature are currently beyond the grasp of

AI, but the sea has already submerged mathematics, chess, and the game Go.

Language translation, autonomous driving, and financial

investment are all on the horizon.

More controversially, in two popular books, Mind Children

(1988) and Robot: Mere Machine to Transcendent Mind (1999), Moravec engaged in

future conjecture based on what he understood of developments in artificial

intelligence research.

By 2040, he said, human intellect would be surpassed by

machine intelligence, and the human species would eventually go extinct.

Moravec arrived at this figure by estimating a functional equivalence between 50,000

million instructions per second (50,000 MIPS) of computer power and a gram of

brain tissue.

He calculated that home computers in the early 2000s equaled

only an insect's nervous system, but that if processing power doubled every

eighteen months, 350 million years of human intellect development could be

reduced to just 35 years of artificial intelligence advancement.

He estimated that a hundred million MIPS would be required

to create human-like universal robots.
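The arithmetic behind this kind of forecast can be sketched as follows. The target of a hundred million MIPS and the eighteen-month doubling period come from the text. The starting figure of 1,000 MIPS for an early-2000s home computer is an assumption made only for illustration, and the function name is hypothetical.

```python
import math

TARGET_MIPS = 100_000_000      # "a hundred million MIPS", from the text
DOUBLING_PERIOD_YEARS = 1.5    # "every eighteen months", from the text
START_MIPS = 1_000             # ASSUMPTION: illustrative early-2000s home computer


def years_to_reach(start_mips, target_mips, doubling_years):
    """Years of steady doubling needed to grow from start_mips to target_mips."""
    doublings = math.log2(target_mips / start_mips)
    return doublings * doubling_years


years = years_to_reach(START_MIPS, TARGET_MIPS, DOUBLING_PERIOD_YEARS)
print(f"{years:.1f} years")  # prints "24.9 years" under the assumed starting point
```

Under these assumptions, roughly 16.6 doublings are needed, which takes about 25 years. A different starting figure shifts the result by one and a half years for every factor of two.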

Moravec refers to these sophisticated robots of

the year 2040 as our "mind children."

Humans, he claims, will devise techniques to delay

biological civilization's final demise.

Moravec, for example, was the first to anticipate what is

now known as universal basic income, which in his vision is delivered by benign artificial

superintelligences.

In a completely automated society, a basic income system

would provide monthly cash payments to all individuals without any type of

employment requirement.

Moravec is more concerned about the idea of a renegade

automated corporation breaking its programming and refusing to pay taxes into

the human cradle-to-grave social security system than he is about technological

unemployment.

Nonetheless, he predicts that these "wild"

intelligences will eventually control the universe.

Moravec has said that his books Mind Children and Robot may

have had a direct impact on the last third of Stanley Kubrick's original screenplay

for A.I. Artificial Intelligence (later filmed by Steven Spielberg).

In Dan Simmons's science fiction novels Ilium and Olympos,

"moravecs" are self-replicating machines named after him.

Throughout his life, Moravec has defended the same physical fundamentalism he

expressed in his high school musings.

He contends in his most transhumanist publications that the

only way for humans to keep up with machine intelligences is to merge with them

by replacing sluggish human cerebral tissue with artificial neural networks

controlled by super-fast algorithms.

In his publications, Moravec has blended the ideas of

artificial intelligence with virtual reality simulation.

He's come up with four scenarios for the development of

consciousness.

(1) human brains in the physical world,

(2) a programmed AI implanted in a physical robot,

(3) a human brain immersed in a virtual reality simulation, and

(4) an AI functioning inside the boundaries of virtual reality.

All of them are equally credible depictions of reality, and they are as

"real" as we believe them to be.

Moravec is the founder and chief scientist of the

Pittsburgh-based Seegrid Corporation, which makes autonomous Robotic Industrial

Trucks that can navigate warehouses and factories without the use of

automated guided vehicle systems.

A human trainer physically pushes Seegrid's vehicles through

a new facility once, stopping at the appropriate spots for the truck to be

loaded and unloaded.

The robot does the rest of the job, determining the most

efficient and safe pathways for future journeys.

Seegrid's vision-guided vehicles (VGVs) have logged over two million production

miles and moved eight billion pounds of merchandise for DHL, Whirlpool, and Amazon.

Moravec was born in the Austrian town of Kautzen.

His father was a Czech engineer who

sold electrical products during World War II.

When the Russians invaded Czechoslovakia in 1944, the family

moved to Austria.

In 1953, his family relocated to Canada, where he now

resides.

Moravec earned a bachelor's degree in mathematics from

Acadia University in Nova Scotia, a master's degree in computer science from

the University of Western Ontario, and a doctorate from Stanford University,

where he worked with John McCarthy and Tom Binford on his thesis.

The Office of Naval Research, the Defense Advanced Research

Projects Agency, and NASA have all supported his research.

Elon Musk (1971–) is an American businessman and inventor.

Elon Musk is an engineer, entrepreneur, and inventor who was

born in South Africa.

He holds citizenship in South Africa, Canada, and the United

States, and resides in California.

Despite his controversial personality, Musk is widely

regarded as one of the most prominent inventors and engineers of the

twenty-first century and an important influencer and contributor to the

development of artificial intelligence.

Musk's business instincts and remarkable technological

talent were evident from an early age.

By the age of ten, he had taught himself how to program

computers, and by the age of twelve, he had produced a video game and sold the

source code to a computer magazine.

An avid reader since childhood, Musk has included allusions to some of his favorite books

in SpaceX's Falcon Heavy rocket launch and in Tesla's software.

Musk's formal education centered on economics and

physics rather than engineering, interests that are mirrored in his subsequent

work, such as his efforts in renewable energy and space exploration.

He began his education at Queen's University in Canada, but

later transferred to the University of Pennsylvania, where he earned bachelor's

degrees in Economics and Physics.

Musk spent just two days at Stanford University

pursuing a PhD in energy physics before leaving to start his first firm, Zip2,

with his brother Kimbal Musk.

Musk has started or cofounded many firms, including three

different billion-dollar enterprises: SpaceX, Tesla, and PayPal, all driven by

his diverse interests and goals.

• Zip2: a web software business that was eventually purchased by Compaq.

• X.com: an online bank that merged with Confinity to become the online payments company PayPal.

• Tesla, Inc.: an electric car and solar panel maker (the latter via its subsidiary SolarCity).

• SpaceX: a commercial aerospace manufacturer and space transportation services provider.

• Neuralink: a neurotechnology startup focusing on brain-computer interfaces.

• The Boring Company: an infrastructure and tunnel construction corporation.

• OpenAI: a nonprofit AI research company focused on the promotion and development of friendly AI.

Musk is a supporter of environmentally friendly energy and consumption.

Concerns over the planet's future habitability prompted him

to investigate the potential of establishing a self-sustaining human colony on

Mars.

Other projects include the Hyperloop, a high-speed

transportation system, and the Musk electric jet, a jet-powered supersonic

electric aircraft.

Musk sat on President Donald Trump's Strategy and Policy

Forum and Manufacturing Jobs Initiative for a short time before stepping down

when the US withdrew from the Paris Climate Agreement.

Musk launched the Musk Foundation in 2002, which funds and

supports research and activism in the domains of renewable energy, human space

exploration, pediatric research, and science and engineering education.

Although Musk is best known for his work with Tesla and

SpaceX and for his contentious social media pronouncements, his effect on AI

is significant.

In 2015, Musk cofounded the nonprofit OpenAI with the

objective of creating and supporting "friendly AI," or AI that is

created, deployed, and utilized in a manner that benefits mankind as a whole.

OpenAI's objective is to make AI open and accessible to the

general public, reducing the risks of AI being controlled by a few privileged

people.

OpenAI is especially concerned with the possibility of

Artificial General Intelligence (AGI), which is broadly defined as AI capable

of human-level (or greater) performance on any intellectual task, and with ensuring

that any such AGI is developed responsibly and transparently and is distributed

evenly and openly.

OpenAI has had its own successes in taking AI to new levels

while staying true to its goals of keeping AI friendly and open.

In June of 2018, a team of OpenAI-built bots defeated a

human team in the video game Dota 2, a feat that could only be accomplished

through teamwork and collaboration among the bots.

Bill Gates, a cofounder of Microsoft, praised the

achievement on Twitter, calling it "a huge milestone in advancing

artificial intelligence" (@BillGates, June 26, 2018).

Musk resigned from the OpenAI board in February 2018 to

prevent any conflicts of interest while Tesla advanced its AI work for

autonomous driving.

Musk became the CEO of Tesla in 2008 after cofounding the

company in 2003 as an investor.

Musk was the chairman of Tesla's board of directors until

2018, when he stepped down as part of a deal with the US Securities and

Exchange Commission over Musk's false claims about taking the company private.

Tesla produces electric automobiles with self-driving

capabilities.

Tesla Grohmann Automation and SolarCity, two of its

subsidiaries, offer relevant automotive technology and manufacturing services

and solar energy services, respectively.

According to Musk, Tesla would reach Level 5 autonomous

driving capabilities in 2019, as defined by the National Highway Traffic Safety

Administration's (NHTSA) five levels of autonomous driving.

Tesla's aggressive development of autonomous driving has

influenced conventional car makers' attitudes toward electric cars and

autonomous driving, and prompted a congressional assessment of how and when the

technology should be regulated.

Musk is widely credited as a key influencer in moving the

automotive industry toward autonomous driving, highlighting the benefits of

autonomous vehicles (including reduced fatalities in vehicle crashes, increased

worker productivity, increased transportation efficiency, and job creation) and

demonstrating that the technology is achievable in the near term.

Tesla's autonomous driving code (Autopilot) has been created and

enhanced under the guidance of Musk and Tesla's Director of AI, Andrej Karpathy.

The computer vision analysis used by Tesla, which includes

an array of cameras on each car and real-time image processing, enables the

system to make real-time observations and predictions.

The cameras, as well as other exterior and internal sensors,

capture a large quantity of data, which is evaluated and utilized to improve

Autopilot programming.

Tesla is the only autonomous car maker that is opposed to

the LIDAR laser sensor (an acronym for light detection and ranging).

Tesla uses cameras, radar, and ultrasonic sensors instead.

Though academics and manufacturers disagree on whether LIDAR

is required for fully autonomous driving, the high cost of LIDAR has limited

Tesla's rivals' ability to produce and sell vehicles at a price point that

allows a large number of cars on the road to gather data.

Tesla is creating its own AI hardware in addition to its AI

programming.

Musk stated in late 2017 that Tesla is building its own

silicon for artificial-intelligence calculations, allowing the company to

construct its own AI processors rather than depending on third-party sources

like Nvidia.

Tesla's AI progress in autonomous driving has been marred by

setbacks.

Tesla has consistently missed self-imposed deadlines, and

serious accidents have been blamed on flaws in the vehicle's Autopilot mode,

including a non-injury accident in 2018, in which the vehicle failed to detect

a parked firetruck on a California freeway, and a fatal accident in 2018, in

which the vehicle failed to detect a pedestrian outside a crosswalk.

Neuralink was established by Musk in 2016.

With the stated objective of helping humans to keep up with

AI breakthroughs, Neuralink is focused on creating devices that can be

implanted into the human brain to better facilitate communication between the

brain and software.

Musk has characterized the devices as a more efficient

interface with computer equipment: whereas people now operate things with their

fingertips and voice commands, directives would instead come straight from the

brain.

Though Musk has made major contributions to AI, his

pronouncements regarding the risks linked with AI have been apocalyptic.

Musk has called AI "humanity's greatest existential

danger" (McFarland 2014) and "the greatest peril we face as a civilisation"

(Morris 2017).

He cautions against the perils of power concentration, a

lack of independent control, and a competitive rush to acceptance without

appropriate analysis of the repercussions.

While Musk has used colorful terminology such as

"summoning the devil" (McFarland 2014) and depictions of cyborg

overlords, he has also warned of more immediate and realistic concerns such as

job losses and AI-driven misinformation campaigns.

Though Musk's statements might come across as alarmist, many

important and well-respected figures, including Microsoft cofounder Bill

Gates, Swedish-American scientist Max Tegmark, and the late theoretical

physicist Stephen Hawking, share his concern.

Furthermore, Musk does not call for the cessation of AI

research.

Instead, Musk advocates for responsible AI development and

regulation, including the formation of a Congressional committee to spend years

studying AI with the goal of better understanding the technology and its

hazards before establishing suitable legal limits.

~ Jai Krishna Ponnappan


See also:

Superintelligence; Technological Singularity; Workplace Automation.

References & Further Reading:

Moravec, Hans. 1988. Mind Children: The Future of Robot and Human Intelligence. Cambridge, MA: Harvard University Press.

Moravec, Hans. 1999. Robot: Mere Machine to Transcendent Mind. Oxford, UK: Oxford University Press.

Moravec, Hans. 2003. “Robots, After All.” Communications of the ACM 46, no. 10 (October): 90–97.

Pinker, Steven. 2007. The Language Instinct: How the Mind Creates Language. New York: Harper.