In the late 1950s, a paradigm-shattering movement known as the "Cognitive Revolution" saw American experimental psychologists break away from behaviorism—the theory that "training" explains human and animal behavior.

This break meant adopting a computational, or information-processing, theory of mind in psychology.

According to cognitive psychology, performing a task requires decision-making and problem-solving procedures that draw on stored and immediate perceptions as well as conceptual knowledge.

In meaning-making activities, signs and symbols encoded in the human nervous system through contact with the environment are stored in internal memory and matched against future events.

According to this viewpoint, humans are skilled at seeking and finding patterns.

On this account, everything a person experiences is stored in memory.

Recognition is the process of matching current perceptions with previously stored information representations.

From about the 1920s through the 1950s, behaviorism dominated mainstream American psychology.

Behaviorism held that learned associations formed through conditioning, which involves stimuli and responses rather than thoughts or emotions, could explain most human and animal behavior.

Under behaviorism, it was assumed that the best way to treat disorders was to change one's behavior patterns.

The new ideas and theories that challenged the behaviorist viewpoint originated mostly from outside of psychology.

Signal processing and communications research, notably the "information theory" work of Claude Shannon at Bell Labs in the 1940s, had a major effect on the discipline.

Shannon claimed that information flow may be used to describe human vision and memory.

In this approach, cognition may be seen as an information-processing phenomenon.

Donald Broadbent was one of the first proponents of a psychological theory of information processing. In Perception and Communication (1958), he wrote that humans have a limited capacity to process the overwhelming amount of information available to them and must therefore apply a selective filter to incoming stimuli.

Information that passes through the filter enters short-term memory, where it is transformed before being transferred to and stored in long-term memory.

His model's analogies are mechanical rather than behavioral.
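
The filter-then-store flow described above can be sketched in a few lines of Python. This is only an illustration, not Broadbent's own formalism: the function names, the two-channel stimulus format, the default capacity of 7, and the rule that everything left in short-term memory is copied to long-term memory are all assumptions made for the example.

```python
from collections import deque

def selective_filter(stimuli, attended_channel):
    """Pass only the stimuli that arrive on the attended channel."""
    return [item for channel, item in stimuli if channel == attended_channel]

def process(stimuli, attended_channel, stm_capacity=7):
    """Filter stimuli, hold them in a bounded short-term store, then copy to long-term."""
    # A deque with maxlen silently drops the oldest item once it is full.
    short_term = deque(maxlen=stm_capacity)
    for item in selective_filter(stimuli, attended_channel):
        short_term.append(item)
    # Assumed transfer rule: whatever is still in short-term memory gets stored.
    long_term = list(short_term)
    return list(short_term), long_term

# Two simultaneous streams, as in a listening task with one message per ear.
stimuli = [("left", "7"), ("right", "4"), ("left", "2"), ("right", "9"), ("left", "3")]
short_term, long_term = process(stimuli, attended_channel="left", stm_capacity=2)
print(short_term)  # ['2', '3']
print(long_term)   # ['2', '3']
```

With a capacity of two, the first attended item ("7") is displaced before it can be stored, which is the kind of bottleneck a limited-capacity account predicts.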

Other psychologists, particularly mathematical psychologists, were inspired by this idea, believing that measuring information in bits might help make the science of memory quantitative.
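
To make "information in bits" concrete, the following sketch computes Shannon entropy for a set of stimuli. The function name and the example probabilities are assumptions chosen for illustration; the formula itself is the standard entropy definition from information theory.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Eight equally likely stimuli carry log2(8) = 3 bits each.
uniform = [1 / 8] * 8
print(entropy_bits(uniform))  # 3.0

# A highly predictable source carries less information per stimulus.
skewed = [0.9, 0.1]
print(round(entropy_bits(skewed), 3))  # 0.469
```

On this measure, identifying one of eight equally likely stimuli requires three bits, which gives experimenters a common unit for comparing tasks that use different materials.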

Psychologists were also influenced by the invention of the digital computer in the 1940s.

Soon after World War II ended, critics and scientists alike began comparing computers to human intelligence. The most prominent were the mathematicians Edmund Berkeley, author of Giant Brains, or Machines That Think (1949), and Alan Turing, in his article "Computing Machinery and Intelligence" (1950).

Early artificial intelligence researchers like Allen Newell and Herbert Simon were motivated by works like these to develop computer systems that could solve problems in a human-like manner.

In computer modeling, mental representations could be treated as data structures and human information processing as programs.

These concepts may still be found in cognitive psychology.

A third source of ideas for cognitive psychology came from linguistics, namely Noam Chomsky's generative approach to linguistics.

Chomsky's Syntactic Structures, published in 1957, described the mental structures needed to support and represent the knowledge that speakers of a language must possess.

He proposed that a grammar include transformational components that turn one syntactic structure into another.

In 1959, Chomsky published a review of B. F. Skinner's book Verbal Behavior; the review is often credited with ending behaviorism's standing as a serious scientific approach to psychology.

Within psychology, Jerome Bruner, Jacqueline Goodnow, and George Austin developed the notion of concept attainment in their book A Study of Thinking (1956), which was especially well suited to the information processing approach.

They concluded that concept learning included "the search for and cataloguing of traits that may be utilized to differentiate exemplars from non-exemplars of distinct categories" (Bruner et al. 1967).

In 1960, Harvard University established a Center for Cognitive Studies under the direction of Bruner and George Miller, giving the Cognitive Revolution an institutional home.

Cognitive psychology made significant contributions to cognitive science in the 1960s, especially in the areas of pattern recognition, attention and memory, and the psychology of language (psycholinguistics).

Pattern recognition was reduced to the perception of very simple features (graphics primitives) and a matching procedure in which the primitives were compared with items stored in visual memory.
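
A minimal sketch of feature-based matching might look like the following. The feature names, the three stored letters, and the use of Jaccard overlap as the matching score are all assumptions made for illustration; the models of the period differed in which primitives and which matching rules they used.

```python
# Hypothetical visual memory: each stored pattern is a set of simple features.
STORED_PATTERNS = {
    "A": {"left_diagonal", "right_diagonal", "horizontal_bar"},
    "H": {"left_vertical", "right_vertical", "horizontal_bar"},
    "L": {"left_vertical", "bottom_bar"},
}

def similarity(observed, stored):
    """Jaccard overlap between two feature sets, from 0.0 to 1.0."""
    return len(observed & stored) / len(observed | stored)

def recognize(observed):
    """Return the label of the stored pattern that best matches the observed features."""
    return max(STORED_PATTERNS, key=lambda label: similarity(observed, STORED_PATTERNS[label]))

print(recognize({"left_vertical", "right_vertical", "horizontal_bar"}))  # H
print(recognize({"left_diagonal", "right_diagonal"}))                    # A
```

The second call shows that an incomplete input can still be recognized, because the best partial match wins.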

Information processing theories of attention and memory emerged in the 1960s as well.

Perhaps the best known is the Atkinson and Shiffrin model, a mathematical model of information as it moves from short-term to long-term memory, with rules for encoding, storage, and retrieval governing the flow.

Information was characterized as being lost from storage due to interference or decay.
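
The flow from short-term to long-term storage can be illustrated with a toy simulation. This is a loose sketch in the spirit of such stage models, not the Atkinson and Shiffrin equations: the class name, the capacity of four items, the rule that two rehearsals are enough for transfer, and the choice to model only displacement by new items (not decay over time) are all assumptions.

```python
class MemoryModel:
    """Toy two-store memory: a bounded short-term store and an unbounded long-term store."""

    def __init__(self, stm_capacity=4, rehearsals_to_store=2):
        self.stm_capacity = stm_capacity
        self.rehearsals_to_store = rehearsals_to_store
        self.short_term = {}    # item -> rehearsal count; dicts keep insertion order
        self.long_term = set()

    def encode(self, item):
        """Place an item in short-term memory, displacing the oldest item if full."""
        if item not in self.short_term and len(self.short_term) >= self.stm_capacity:
            oldest = next(iter(self.short_term))
            del self.short_term[oldest]  # lost through interference from the new item
        self.short_term.setdefault(item, 0)

    def rehearse(self, item):
        """Rehearse an item; enough rehearsals copy it into long-term memory."""
        if item in self.short_term:
            self.short_term[item] += 1
            if self.short_term[item] >= self.rehearsals_to_store:
                self.long_term.add(item)

    def retrieve(self, item):
        """An item can be recalled if it is in either store."""
        return item in self.short_term or item in self.long_term

memory = MemoryModel(stm_capacity=2)
memory.encode("cat")
memory.rehearse("cat")
memory.rehearse("cat")       # second rehearsal stores "cat" in long-term memory
for word in ("dog", "sun", "sky"):
    memory.encode(word)      # later words push earlier ones out of short-term memory

print(memory.retrieve("cat"))  # True: displaced from short-term, but stored long-term
print(memory.retrieve("dog"))  # False: displaced before it was rehearsed
```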

The field of psycholinguistics grew out of efforts to find out how Chomsky's ideas about language worked in practice.

Psycholinguistics utilizes many of the methods of cognitive psychology.

Mental chronometry is the use of reaction time in perceptual-motor tasks to infer the content, duration, and temporal sequencing of cognitive operations.

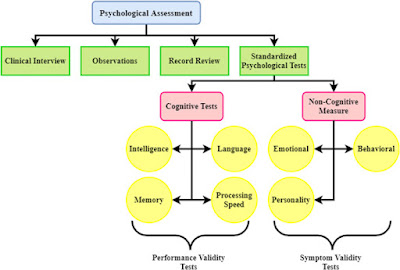

Processing speed is used as a measure of processing efficiency.

In one well-known study, participants were asked questions such as "Is a robin a bird?" and "Is a robin an animal?" The greater the categorical distance between the terms, the longer respondents took to answer.

The researchers showed how semantic models might be hierarchical: the concept robin is directly connected to bird and only indirectly connected to animal, by way of bird.
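
The robin example can be expressed as a small hierarchy in which the number of links traversed stands in for response time. The dictionary layout and function name below are assumptions for illustration, and treating each link as one unit of time is a simplification rather than a result from the study.

```python
# Each concept points to its immediate superordinate category.
IS_A = {"robin": "bird", "bird": "animal"}

def links_between(concept, category):
    """Count the is-a links from concept up to category; None if there is no path."""
    steps = 0
    current = concept
    while current != category:
        if current not in IS_A:
            return None
        current = IS_A[current]
        steps += 1
    return steps

print(links_between("robin", "bird"))    # 1
print(links_between("robin", "animal"))  # 2
print(links_between("robin", "fish"))    # None
```

Verifying "a robin is an animal" crosses two links instead of one, which is the pattern the longer reaction times were taken to reflect.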

Information flows from "robin" to "animal" by passing through "bird."

Memory and language studies began to intersect in the 1970s and 1980s, as artificial intelligence researchers and philosophers debated proposed representations of visual imagery.

As a result, cognitive psychology has become considerably more multidisciplinary.

Two new lines of research have emerged: connectionism and cognitive neuroscience.

Connectionism combines cognitive psychology, artificial intelligence, neuroscience, and philosophy of mind to seek neural models built from nodes, their links, and the interconnected networks that emerge from them.

Connectionism (also known as "parallel distributed processing" or "neural networking") is fundamentally computational.

Its artificial neural networks are loosely inspired by perception and cognition in the human brain.

Cognitive neuroscience is a branch of study that investigates the nervous system's role in cognition.

It lies at the intersection of cognitive psychology, neurobiology, and computational neuroscience.

~ Jai Krishna Ponnappan


See also:

Macy Conferences.

Further Reading

Bruner, Jerome S., Jacqueline J. Goodnow, and George A. Austin. 1967. A Study of Thinking. New York: Science Editions.

Gardner, Howard. 1986. The Mind’s New Science: A History of the Cognitive Revolution. New York: Basic Books.

Lachman, Roy, Janet L. Lachman, and Earl C. Butterfield. 2015. Cognitive Psychology and Information Processing: An Introduction. London: Psychology Press.

Miller, George A. 1956. “The Magical Number Seven, Plus or Minus Two: Some Limits on Our Capacity for Processing Information.” Psychological Review 63, no. 2: 81–97.

Pinker, Steven. 1997. How the Mind Works. New York: W. W. Norton.